May 07, 2025

A Comprehensive Overview of the Databricks AI Capabilities

Author:

Senior MLOps Engineer

Reading time:

14 minutes

In 2025, Databricks offers a comprehensive suite of generative AI tools built on its data lakehouse foundation. This article focuses specifically on these GenAI capabilities.

The GenAI toolkit includes:

- Mosaic AI Gateway: Unified access to both Databricks-native models (like DBRX) and external LLMs

- Vector Search: A fully-integrated vector database optimized for RAG applications

- AI Agent Framework: Tools for creating, deploying, and evaluating AI agents

- SQL and BI Integration: Natural language interfaces for data interaction

These tools integrate seamlessly with Databricks’ governance framework (Unity Catalog), enabling enterprises to implement production-grade AI while maintaining security and compliance.

The following sections detail each GenAI component, its practical applications across industries, and the advantages and limitations for enterprise implementation.

Databricks platform fundamentals

Before diving into the details of these GenAI capabilities, we briefly cover Databricks platform fundamentals to ensure all readers share a common understanding of the underlying architecture. If you are familiar with those concepts, skip to “GenAI Tools Available in Databricks”.

What is Databricks?

Databricks is an advanced analytics platform operating in the cloud, available from three major providers: Azure, AWS, and Google Cloud. In practice, it serves as a software layer installed on the chosen cloud environment, managing both computation (compute) and data storage.

It’s worth noting that all processing takes place within this environment – Databricks doesn’t function as an independent engine, but as an integrated cloud tool working closely with the cloud infrastructure.

Organizations choose Databricks primarily for its ability to effectively process data with varying structures. The main goal is to transform this data into a format that allows for extracting valuable business insights, from classic BI reports and complex analyses to advanced machine learning and artificial intelligence models.

Databricks architectural components

- Delta Lake

One of the most important elements of Databricks’ architecture is Delta Lake, an open-source storage layer that underpins data processing in the ETL (extract, transform, load) model. Data is transformed, cleaned, and loaded into so-called Delta tables: optimized tabular structures with transactional guarantees that enable efficient change management and high analytical performance.

- Unity Catalog

Above the data layer operates Unity Catalog – a sophisticated permission management system. It allows for a very precise definition of who can use specific tools and data available on the platform and how. This solution is particularly valued by organizations with high security and process auditability requirements.

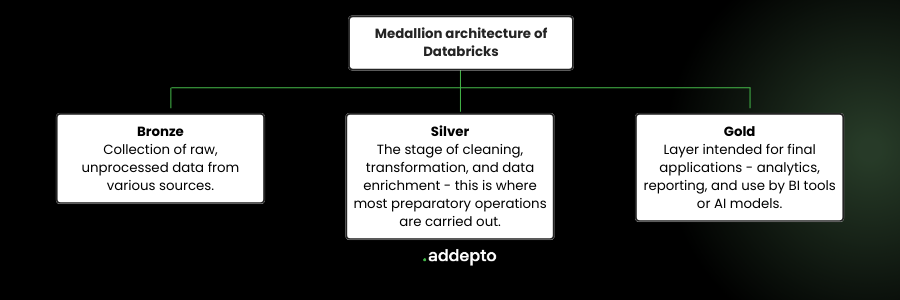

Databricks medallion architecture

Data processing in Databricks often relies on the so-called medallion architecture, dividing the process into three key layers:

- Bronze: Collection of raw, unprocessed data from various sources.

- Silver: The stage of cleaning, transformation, and data enrichment – this is where most preparatory operations are carried out.

- Gold: Layer intended for final applications – analytics, reporting, and use by BI tools or AI models.

This approach not only organizes data but also enables the smooth introduction of GenAI tools into existing ETL processes – for example, automatic extraction of information from unstructured sources and their conversion to a format conducive to advanced analytics.
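The three layers can be illustrated with a minimal, plain-Python sketch. It is not Databricks code: in practice each layer would be a Delta table and the transformations would run in Spark. The sample records and the function names `to_silver` and `to_gold` are assumptions made up for this illustration.

```python
from collections import defaultdict

# Bronze: raw records exactly as they arrive (hypothetical sample data).
bronze = [
    {"order_id": "1", "country": " pl ", "amount": "120.50"},
    {"order_id": "2", "country": "DE", "amount": "80"},
    {"order_id": "2", "country": "DE", "amount": "80"},            # duplicate
    {"order_id": "3", "country": "PL", "amount": "not-a-number"},  # bad value
]


def to_silver(rows):
    """Silver: parse types, normalize values, drop bad rows and duplicates."""
    seen, silver = set(), []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows whose amount cannot be parsed
        if row["order_id"] in seen:
            continue  # skip repeated order ids
        seen.add(row["order_id"])
        silver.append({
            "order_id": int(row["order_id"]),
            "country": row["country"].strip().upper(),
            "amount": amount,
        })
    return silver


def to_gold(rows):
    """Gold: aggregate cleaned rows into a reporting-ready summary."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["country"]] += row["amount"]
    return dict(totals)


silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)
```

A GenAI step, such as extracting fields from free text, would typically slot in between Bronze and Silver as one more transformation.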

GenAI tools available in Databricks

Understanding the platform’s fundamental mechanisms is crucial because GenAI tools build on these foundations.

Let’s look at the most important AI solutions available in the Databricks ecosystem.

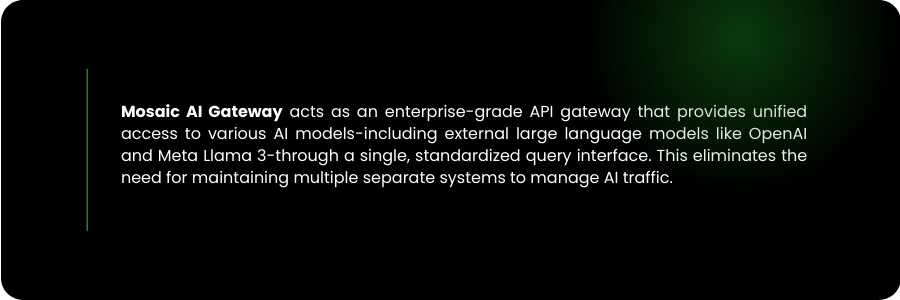

Mosaic AI Gateway

Mosaic AI Gateway serves as a central access point to various language models (LLMs) – both those hosted directly by Databricks (e.g., DBRX, Mistral) and external ones, such as OpenAI, Anthropic, Azure OpenAI, AWS Bedrock, or Google Vertex AI.

The Gateway provides a unified API compatible with OpenAI, which significantly simplifies switching between different models and efficiently managing traffic (e.g., load balancing).
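To show what an OpenAI-compatible API means in practice, the sketch below builds two chat completion requests that differ only in the model name, without sending them. The workspace URL, the endpoint names, and the token placeholder are assumptions for illustration; check your own workspace for the actual base URL and endpoint names.

```python
import json
import urllib.request

# Hypothetical workspace URL; replace with your own.
BASE_URL = "https://example-workspace.cloud.databricks.com/serving-endpoints"


def build_chat_request(endpoint_name, user_message, token="<your-token>"):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": endpoint_name,
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical endpoint names: one Databricks-hosted, one external model.
req_a = build_chat_request("databricks-dbrx-instruct", "Summarize Q1 sales.")
req_b = build_chat_request("my-external-openai-endpoint", "Summarize Q1 sales.")

body_a = json.loads(req_a.data)
body_b = json.loads(req_b.data)
differing = {key for key in body_a if body_a[key] != body_b[key]}
print(differing)  # only the model name changes between providers

# To actually send a request: urllib.request.urlopen(req_a)
```

Because the request shape stays the same, switching providers becomes a configuration change rather than a code change.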

Key functionalities of Mosaic AI Gateway:

- Advanced security mechanisms: Comprehensive systems for tracking usage, logging query content, and so-called guardrails – protective mechanisms at input and output, blocking, among other things, leakage of sensitive data (PII) outside the organization. All this information is recorded in Delta Tables, enabling subsequent auditing, analysis, and use for improving model quality.

- Precise permission management: Access to individual models and endpoints is managed by Unity Catalog, ensuring very accurate control over who can use particular resources and to what extent.

- Flexible billing model: Serverless models are billed per token, while custom deployments are billed per GPU. The Gateway also allows for easy deployment of custom models and their fine-tuning.

- Inference Tables: Every interaction with the model can be recorded in specially designed tables, which are extremely useful for debugging, monitoring, and further training models on real-world data.
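The idea behind guardrails and inference tables can be sketched in a few lines. This is a toy illustration, not how the Gateway is implemented: the regular expression only catches email addresses, the list `inference_log` stands in for an inference table, and `guarded_call` and `mask_pii` are hypothetical names.

```python
import re
from datetime import datetime, timezone

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
inference_log = []  # stand-in for an inference table


def mask_pii(text):
    """Replace email addresses with a placeholder."""
    return EMAIL.sub("[EMAIL]", text)


def guarded_call(model_fn, prompt, user):
    """Apply input and output guardrails and log the interaction."""
    safe_prompt = mask_pii(prompt)                # input guardrail
    response = mask_pii(model_fn(safe_prompt))    # output guardrail
    inference_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "request": safe_prompt,
        "response": response,
    })
    return response


def echo_model(prompt):
    # Hypothetical model that simply echoes the prompt back.
    return f"echo: {prompt}"


answer = guarded_call(echo_model, "Contact jan.kowalski@example.com please", "analyst")
print(answer)
print(len(inference_log))
```

Every call leaves one record behind, which is what makes later auditing and debugging possible.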

Databricks Vector Search

Vector Search is a serverless vector database, fully integrated with the Databricks ecosystem.

It is distinguished by:

- Automatic data synchronization: The system allows specifying a table (Delta Table) and columns to be transformed into embeddings. The mechanism itself takes care of updating indices when changes occur in the data source, eliminating the need to build dedicated pipelines.

- High performance and scalability: The database handles billions of embeddings and thousands of queries per second, ensuring low latency and automatically adjusting resources to the load.

- Support for Retrieval Augmented Generation (RAG): Vector Search is optimized for RAG techniques, enabling enrichment of queries directed to LLMs with context from corporate data resources, which significantly increases the accuracy and quality of generated responses.

- Integration with governance mechanisms: Full compatibility with Unity Catalog—access to embeddings and search results is controlled according to established security policies, and data flow paths are automatically monitored.

Vector Search offers three modes of embedding management: full automation (where Databricks performs all tasks), partial management (when we prepare embeddings ourselves), and full control via API (when the source is data outside Delta Tables).
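The retrieval step of RAG can be shown with a minimal sketch. It does not use the Vector Search API: the word-count "embedding" is a deliberately naive stand-in for a real embedding model, the documents are invented, and the in-memory `index` replaces a managed index.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical corporate documents.
documents = [
    "Refunds are processed within 14 days of the return request.",
    "Our warehouse in Gdansk ships orders every weekday.",
    "Premium customers get free shipping on all orders.",
]
index = [(doc, embed(doc)) for doc in documents]


def search(query, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_rag_prompt(question):
    """Enrich the question with retrieved context before sending it to an LLM."""
    context = "\n".join(search(question, k=1))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(build_rag_prompt("How long do refunds take to be processed"))
```

In the fully automated mode described above, both the embedding step and the index refresh would be handled by the platform rather than by code like this.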

AI Agents and workflows

Databricks enables quick creation and deployment of AI agents through integration with MLflow, a tool for managing the entire lifecycle of ML models and GenAI agents.

Key elements of this ecosystem:

- Mosaic AI agent framework: Enables tracking of agent code, performance metrics, and detailed traces (MLflow Tracing), which significantly facilitates debugging and optimization of agent operation.

- AI playground: An interactive environment for testing and comparing different agents and models, available without the need to deploy complex infrastructure. It allows for rapid prototyping, feedback collection, and real-time analysis of agent behavior.

- Agent evaluation: A set of tools for comprehensive evaluation of agent quality, including applications supporting human-in-the-loop feedback and customization of evaluation criteria to specific business needs.

- Unity Catalog functions: A mechanism for defining tools (functions) available to agents, with precise permissions and full integration with other platform elements.

MLflow Model Registry and Model Serving provide registration, deployment, and monitoring of agents and models, and all interactions are recorded in inference tables, guaranteeing full observability and the possibility of continuous improvement.
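The concept of governed tools can be sketched as a small registry that checks permissions before an agent calls a function. This is a conceptual illustration only: `ToolRegistry`, `Tool`, and `order_status` are hypothetical names and do not reflect the Unity Catalog API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Tool:
    name: str
    func: Callable
    allowed_agents: set = field(default_factory=set)


class ToolRegistry:
    """Toy stand-in for a governed function catalog."""

    def __init__(self):
        self._tools = {}

    def register(self, name, func, allowed_agents):
        self._tools[name] = Tool(name, func, set(allowed_agents))

    def call(self, agent, name, *args):
        tool = self._tools[name]
        if agent not in tool.allowed_agents:
            raise PermissionError(f"{agent} may not call {name}")
        return tool.func(*args)


def order_status(order_id):
    # Hypothetical tool; a real one might query a Delta table.
    return f"Order {order_id}: shipped"


registry = ToolRegistry()
registry.register("order_status", order_status, allowed_agents={"support_agent"})

print(registry.call("support_agent", "order_status", 42))
try:
    registry.call("marketing_agent", "order_status", 42)
except PermissionError as err:
    print("denied:", err)
```

Keeping the permission check in the registry rather than in each agent means an agent cannot grant itself access to a tool.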

GenAI in SQL and BI tools

Databricks has also introduced the ability to call LLMs and agents directly from SQL queries through the `ai_query` function. This allows for:

- Enriching existing data (e.g., automatic grammar correction in text columns) or building simple agents accessible directly from SQL.

- Integration of AI workflows with existing ETL pipelines and reporting systems, which opens new possibilities for BI teams and analysts.
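The grammar-correction example could look roughly like the query built below. The table name, column name, and endpoint name are assumptions for illustration, and the sketch only builds the SQL string; in a Databricks notebook it would be passed to `spark.sql(...)`.

```python
def grammar_fix_query(table, text_column, endpoint):
    """Build a SQL statement that asks an LLM endpoint to correct a text column."""
    return (
        f"SELECT {text_column}, "
        f"ai_query('{endpoint}', "
        f"CONCAT('Correct the grammar of this text: ', {text_column})) AS corrected "
        f"FROM {table}"
    )


# Hypothetical table, column, and serving endpoint names.
query = grammar_fix_query(
    table="main.support.tickets",
    text_column="description",
    endpoint="my-llm-endpoint",
)
print(query)

# In a notebook (not run here): corrected_df = spark.sql(query)
```

The identifiers are interpolated directly into the string, so this helper should only be used with trusted, hard-coded names.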

Genie – Conversational BI and API Agent

Genie is a solution enabling interaction with data in natural language. It allows users to engage in dialogue with tables and dashboards, generate charts, and conduct analyses without knowledge of SQL.

The tool understands data structure and can independently join tables and generate appropriate queries. It can also be used as an agent in multi-agent systems through a dedicated API.

Real Advantages and Limitations of Databricks in the Context of GenAI

After discussing key functionalities, it’s worth looking at an objective analysis of the platform’s strengths and limitations, with particular emphasis on practical aspects of implementations.

Databricks advantages

- Integrated tool ecosystem

Databricks offers an extensive set of GenAI tools that are mutually integrated and work together within a single platform. Particularly important is the aforementioned integration with Unity Catalog, enabling precise access management to data and functions at the level of the entire ecosystem.

- Granular permission management

Unity Catalog Functions allow for detailed definition of tools and assignment of permissions to them. This makes it possible to design agents that have access only to selected functions and data, which significantly raises the level of security and flexibility of implementations.

- Effective use of data from Delta Tables

Advanced operation mechanisms on Delta Tables and the ability to process both structured and unstructured data enable rapid building of AI solutions that previously presented considerable implementation difficulties.

- Support for microservice architecture

The platform favors designing solutions based on AI microservices. Instead of one monolithic system, it’s possible to create an ecosystem of specialized agents, each responsible for a specific range of functionality and operating on dedicated data resources.

- Automated deployments and efficient scaling

Databricks enables quick transfer of solutions to production environments and automatic infrastructure scaling. This significantly simplifies the process of prototyping and implementation, eliminating the need to build custom DevOps systems.

Databricks limitations

- Developer environment based on Notebooks

The platform promotes development in notebooks, which can be a significant constraint for teams accustomed to working in local IDE environments. Although dedicated plugins are available, they don’t provide the full independence and offline working comfort that some developers are accustomed to.

- Preference for specific frameworks

A significant part of the examples and recommended solutions is based on the LangChain framework. Despite declared support for alternative tools, LangChain is strongly promoted, which may create a barrier for teams preferring other technological solutions.

- Limited availability of selected out-of-the-box functionalities

Not all advanced tools, such as OCR, are available as standard. Building such pipelines often requires independent implementation and integration within Databricks notebooks.

- Billing model and cost aspects

Databricks is a more expensive solution compared to native cloud services: the additional overhead can range from 25% to even 100% relative to base cloud services. Billing is done in the Databricks Units (DBU) model, and dedicated cost calculators are available for individual tools, facilitating expense estimation.

- Stream processing with noticeable delays

Streaming in the Databricks environment doesn’t function in strictly real-time mode; delays can range from a few minutes to a dozen or so, depending on the complexity of the transformations being performed.

Industry-specific implementation scenarios

Databricks’ AI capabilities are being deployed across industries in distinctive ways that address sector-specific challenges:

Finance

Financial institutions are leveraging Databricks’ unified platform to improve risk assessment, detect fraud, and enhance customer experiences. JP Morgan Chase uses Databricks to process over 1 billion transactions daily, applying AI models to detect potentially fraudulent activities in near real-time. The medallion architecture proves particularly valuable for maintaining regulatory compliance while enabling innovation.

Retail

H&M utilizes Databricks to analyze customer data from both online and in-store interactions. Real-time processing enables personalized recommendations, optimized inventory management, and trend prediction, leading to increased customer loyalty and reduced inventory costs.

Supply Chain and Logistics

Chevron Phillips Chemical Company partnered with Databricks and Seeq to scale industrial IoT analytics and machine learning for time-series data, improving operational insights and efficiency.

Healthcare and Life Sciences

In healthcare, organizations are implementing Databricks to accelerate research, improve patient outcomes, and optimize operations. Mayo Clinic’s implementation integrates clinical, genomic, and imaging data to power AI models that predict disease progression and treatment effectiveness. The platform’s ability to handle both structured and unstructured data (including medical images and clinical notes) provides a comprehensive view of patient health.

Summary: GenAI on Databricks

Databricks constitutes a comprehensive platform for designing and implementing GenAI solutions, offering a rich set of mutually integrated tools, precise access management, and support for modern microservice architecture. Among the significant limitations are the notebook-based development environment, preference for specific frameworks, higher costs, and insufficient availability of some advanced functions in the basic package.

The platform will work particularly well in scenarios requiring rapid integration of AI solutions with existing data resources and flexible permission management.

However, for more complex, non-standard implementations, it may require additional work and adaptations to the specific requirements of the organization.

Frequently Asked Questions: Databricks, GenAI, and Data Management

What is the role of AI-generated comments in Databricks?

AI-generated comments in Databricks, typically provided in Unity Catalog, use large language models (LLMs) to automatically generate documentation and metadata for data assets such as tables, columns, and functions. These comments enhance data discoverability, help teams quickly understand data context, and support compliance and governance initiatives. They are particularly crucial for organizations aiming to scale AI responsibly while maintaining clear data definitions and comprehensive audit trails.

How can I use AI agents to load data into Databricks?

With tools like the Mosaic AI Agent Framework, you can quickly build and deploy AI agents that automate data ingestion and transformation processes. These agents can:

- Read from external data sources

- Process and clean data automatically

- Load processed data into Delta Tables

- Integrate seamlessly with the medallion architecture (Bronze, Silver, Gold layers) for scalable ETL operations

The MLflow integration allows you to track, debug, and optimize agents handling your data pipelines. This approach significantly accelerates the onboarding of new data sources and automates repetitive ETL tasks.

Is Databricks public?

As of August 2025, Databricks is not a public company. The platform remains privately owned, though it has announced IPO ambitions for the near future and continues to attract significant institutional investment.

Who owns Databricks?

Databricks was founded by researchers from UC Berkeley and is jointly owned by its founders, employees, and a range of investors including major technology firms and venture capital groups. CEO Ali Ghodsi and other founders retain substantial influence, with major stakes held by Microsoft, AWS, and other strategic partners.

What is a schema in Databricks?

A schema is a logical container within Unity Catalog that groups related data assets including tables, views, AI models, and functions. Schemas serve multiple purposes:

- Organize data assets logically

- Manage permissions and access control

- Clarify data lineage and relationships

- Make large-scale data operations more manageable and secure

What is Unity Catalog in Databricks?

Unity Catalog is Databricks’ comprehensive data governance system that provides:

- Fine-grained access control across all data assets

- Audit capabilities for compliance and security

- Lineage tracking to understand data flow and dependencies

- Function management across multiple cloud environments

Unity Catalog is tightly integrated with all GenAI components, including Vector Search and model endpoints, ensuring that data and resources are discoverable, secure, and used according to organizational policies. Its governance features are critical for meeting enterprise requirements in regulated industries.

What is a cluster in Databricks?

A cluster is a collection of cloud compute resources managed together to run processing and analytics workloads. Clusters handle everything from data ingestion to ETL processes, ad hoc queries, and the training or serving of AI models. Key features include:

- Automatic or on-demand provisioning

- Elastic scaling based on workload requirements

- Multi-language support (Python, SQL, Scala, R) in Databricks’ notebook environment

- Optimized performance for both batch and streaming workloads

Is Databricks open source?

While Databricks itself is a commercial SaaS platform, it is built upon popular open source projects created by its founders, including Apache Spark, Delta Lake, and MLflow. Additionally, Unity Catalog has been open sourced, fostering transparency and interoperability in data governance and AI model management.

What is the difference between Databricks and Azure AI?

Databricks is a unified analytics and AI platform specifically designed for building, deploying, and governing data-centric and generative AI applications at scale. Its key strengths include:

- Tight integration with data lakes via Delta Lake

- Advanced governance through Unity Catalog

- Native support for building AI agents and LLM applications

- Unified data and AI pipeline management

Azure AI, by contrast, is Microsoft’s suite of machine learning and cognitive services that provides a broader range of APIs including vision, speech, and general AI capabilities.

While Databricks can run on Azure and complement Azure AI services, Databricks uniquely focuses on unifying data and AI pipelines under a single governance and collaboration framework. This makes it particularly well-suited for organizations that need to manage complex data workflows alongside their AI initiatives.
