May 09, 2025

Model Context Protocol (MCP): Solution to AI Integration Bottlenecks

Author:

CSO & Co-Founder

In the race to make Artificial Intelligence truly useful, not just conversational, one question is quietly surfacing across engineering teams and product roadmaps: how can AI systems actually do things in the real world?

It’s one thing for a Large Language Model to generate polished emails, clean code, or research summaries; it’s another for it to trigger an API call, analyze a live data feed, or automate a multistep workflow across tools and platforms. This leap, from “language generator” to capable AI agent, is the frontier that AI companies are now sprinting toward. Like most technological turning points, the success of this transition hinges not just on the AI itself, but on the infrastructure beneath it.

This is where the Model Context Protocol (MCP) comes in. This quietly emerging standard is likely to become the backbone of how AI agents interface with the world around them, because it addresses a common bottleneck: how do you connect a powerful LLM to the tools, data, and APIs it needs without building a fragile, one-off integration every time?

Today, even the most advanced AI products often rely on bespoke glue code, internal APIs, and tightly coupled systems. The result? Innovation slows, interoperability suffers, and scalability becomes an afterthought.

MCP offers a different path: a unified protocol for connecting LLMs to the external world through standardized interfaces called tools, resources, and prompts. With this structure, AI agents can securely access external capabilities without engineers reinventing the wheel for each new feature or platform.

The idea is gaining ground fast. From indie developers building custom GPT-4 agents to startups creating collaborative AI coding tools, early adopters are beginning to rally around MCP as a way to scale smarter and faster. At a time when companies are investing heavily in AI enablement, the timing couldn’t be better for a shared standard that prioritizes reuse, security, and speed.

As AI moves from lab demos to enterprise deployment, protocols like MCP could become the connective tissue that makes or breaks that transition. Those who understand and implement it early stand to gain a serious competitive edge.

In this article, we’ll break down the core concepts behind MCP and explore the challenges it’s designed to solve.

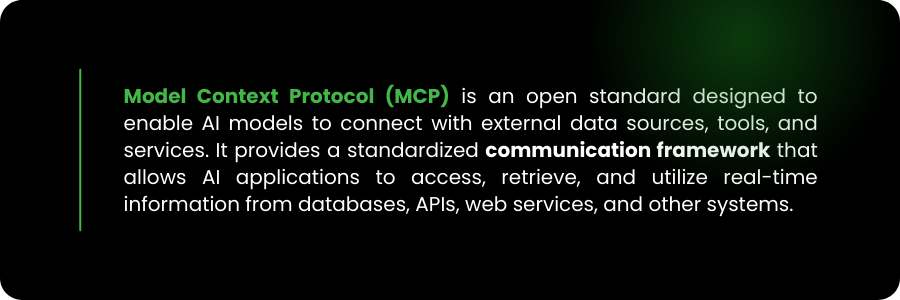

What is Model Context Protocol (MCP)?

Anthropic introduced the Model Context Protocol (MCP) in November 2024 to solve a critical challenge in AI deployment: connecting powerful language models to the diverse systems where real-world data resides.

Released as an open standard, MCP enables developers to build secure, two-way connections between data sources and AI-powered tools.

Key features of MCP:

- Standardized interface for data access

- Secure communication between models and external systems

- Real-time data retrieval and integration

- Simplifies AI deployment in complex environments

What problem does the MCP solve?

As AI assistants became more advanced, they remained largely isolated from the actual data and tools organizations use daily. Each time a developer wanted to connect an AI model to a new database, API, or content repository, they had to write custom integration code.

This created a fragmented landscape of one-off connectors, making it hard to scale AI across many contexts and leading to information silos.

This challenge is often described as the “M×N problem”: with M models and N tools, each required a separate, bespoke connector, multiplying the integration effort and complexity.

Even the most sophisticated AI models were limited by this isolation – trapped behind information silos and legacy systems that prevented them from accessing relevant, up-to-date information. This made enterprise AI integration repetitive, costly, and difficult to scale.

The business implications of the M×N problem are particularly severe for organizations looking to scale AI across departments, tools, and workflows.

Here’s a breakdown of the key impacts:

1. High integration costs and developer overhead

When every AI model requires a custom-built connector to each tool, the integration effort grows multiplicatively: M models and N tools mean M×N connectors. Each connector demands engineering resources, maintenance, testing, and security reviews, which makes AI adoption expensive and slow to implement.

2. Information silos and limited model utility

Without seamless access to live systems and up-to-date data, even the most advanced AI models remain disconnected from the organization’s actual operations. This results in stale outputs, irrelevant insights, and ineffective automation.

As a result, AI initiatives fail to deliver value, because models cannot make decisions or recommendations based on real-time, contextual business data.

3. Fragmentation and lack of standardization

A patchwork of one-off connectors creates a brittle, inconsistent architecture where integrations are non-reusable, hard to audit, and fragile to change.

As a result, organizations face long-term scaling issues, security risks, and inconsistent behavior across AI-enabled applications. This also slows down the rollout of AI across teams or business units.

4. Inhibited ROI on AI investments

When models are locked behind integration bottlenecks and data silos, organizations are unable to fully capitalize on their AI infrastructure, tools, or talent. AI projects underdeliver on promised ROI, undermining confidence among stakeholders and delaying broader AI adoption.

MCP as the universal standard

Anthropic developed MCP as an open, universal standard that serves as “a USB-C port for AI applications,” providing a standardized way to connect AI models to different data sources and tools.

By creating this protocol, Anthropic aimed to replace fragmented, ad-hoc integrations with a unified interface that could dramatically simplify the integration landscape.

The architecture transforms the complicated M×N problem into a much simpler M+N setup: each model and each tool only needs to implement MCP once to become interoperable with the entire ecosystem. This approach mirrors how standards like ODBC once unified database connectivity.
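To make the arithmetic concrete, here is a small sketch that counts integrations under both setups. The function names are ours, chosen for illustration, and the model simply assumes one connector per model-tool pair without a standard and one protocol implementation per participant with MCP.

```python
def connectors_without_standard(models: int, tools: int) -> int:
    """Every model needs its own connector to every tool: M x N."""
    return models * tools


def implementations_with_mcp(models: int, tools: int) -> int:
    """Each model and each tool implements the protocol once: M + N."""
    return models + tools


if __name__ == "__main__":
    # A few illustrative (models, tools) combinations.
    for m, n in [(3, 5), (5, 20), (10, 50)]:
        print(
            f"M={m}, N={n}: "
            f"{connectors_without_standard(m, n)} bespoke connectors vs "
            f"{implementations_with_mcp(m, n)} MCP implementations"
        )
```

With 5 models and 20 tools, that is 100 bespoke connectors against 25 protocol implementations, and the gap widens as either number grows.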

MCP gains industry traction

Anthropic has released pre-built MCP servers for widely used enterprise systems including Google Drive, Slack, GitHub, Git, Postgres, and Puppeteer. These servers allow AI systems to interact with enterprise tools through a unified protocol, simplifying integration and tool invocation.

In a major endorsement of MCP’s enterprise potential, Microsoft partnered with Anthropic to develop an official C# SDK, accelerating adoption within corporate environments.

Simultaneously, developer tool companies such as Zed, Replit, Codeium, and Sourcegraph began integrating MCP to enrich their platforms with AI capabilities.

Open-sourcing MCP: A community-driven ecosystem

By open-sourcing the Model Context Protocol, Anthropic fostered a collaborative ecosystem where developers and organizations can build and share MCP-compatible connectors. This eliminates the need for maintaining proprietary connectors for each data source, enabling:

- Greater interoperability

- A more sustainable AI integration architecture

- Faster innovation and adoption

Through a consistent protocol, AI systems can maintain contextual awareness as they interact with diverse tools and datasets, significantly reducing complexity while enhancing performance and relevance.

OpenAI joins: A turning point

In March 2025, OpenAI announced that it would support MCP across its products. CEO Sam Altman wrote:

“People love MCP and we are excited to add support across our products.”

OpenAI’s support began with the Agents SDK, with ChatGPT desktop app and Responses API integration to follow.

This move was particularly noteworthy because OpenAI and Anthropic are direct competitors. As technology analyst Shelly Palmer commented:

“So why would OpenAI adopt its rival’s protocol? Because standardization will speed AI adoption.”

Momentum from Microsoft and Google

Even before OpenAI’s announcement, Microsoft had already embedded MCP into Azure AI services and released the C# SDK in partnership with Anthropic, further validating the protocol’s enterprise readiness.

Shortly after, Google DeepMind CEO Demis Hassabis confirmed MCP support within the Gemini SDK, calling it a:

“Rapidly emerging open standard for agentic AI.”

MCP’s Rise

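MCP gained substantial momentum in early 2025, and developer interest in it has reportedly outpaced interest in frameworks like LangChain. The comparison is loose, because these are different kinds of technology: LangChain and CrewAI are agent frameworks and OpenAPI is an API description format, while MCP is a protocol that such frameworks can build on. Even so, MCP is now positioned to become the leading standard for tool-using AI systems.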

Model Context Protocol (MCP) structure

Understanding the structure of the Model Context Protocol (MCP) isn’t just technically useful—it also reveals how businesses can build scalable AI solutions, reduce integration overhead, and accelerate time-to-value across applications.

Here’s a clear breakdown of MCP’s components:

- MCP Host

The MCP Host is the user-facing application where you interact with AI models, serving as the central component in the Model Context Protocol architecture. It manages multiple MCP Clients, each maintaining a one-to-one connection with a specific MCP server.

Examples: Claude Desktop app, IDEs like Cursor or Windsurf, chatbots, or custom AI tools.

By acting as the control layer for AI interactions, the Host enables businesses to embed intelligent capabilities into existing applications without disrupting user workflows. This accelerates adoption, promotes consistency across AI experiences, and future-proofs investments by allowing new tools to be added without redesigning the interface.

- MCP Client

The MCP Client serves as a specialized bridge within the Host application, maintaining a one-to-one connection with a specific MCP Server. It handles all communication between them, discovers available capabilities, negotiates protocol compatibility, and manages resource subscriptions.

A Host can incorporate multiple Clients simultaneously, each connected to different Servers, enabling access to diverse external systems through a standardized interface.

For businesses, the Client abstracts away the complexity of connecting to diverse systems. This makes it faster and cheaper to integrate multiple third-party or internal services, enabling more powerful, multi-functional AI solutions. It also allows for modular scaling – new capabilities can be added via new Clients without rewriting core logic.

- MCP Server

The MCP Server is a program that exposes external systems to AI models through a standardized interface, running either locally on a user’s device or remotely in the cloud.

These servers act as wrappers around real-world systems like Google Drive, Slack, GitHub, and databases, providing three distinct capabilities:

- Tools (invokable functions)

- Resources (readable data sources)

- Prompts (predefined interaction templates)

By turning existing systems into AI-accessible endpoints, the Server enables businesses to reuse their current infrastructure rather than replace it. This allows teams to unlock new value from legacy tools, increase operational efficiency, and ensure data and functionality are available to AI assistants in a controlled, standardized manner.
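As a rough illustration of the three capability types, the sketch below describes what a hypothetical ticketing server might advertise. The field names (`inputSchema`, `uri`, `mimeType`, `arguments`) follow the shapes used in the MCP specification as we understand it; the ticket system, its tool, resource, and prompt are invented for the example.

```python
from dataclasses import asdict, dataclass, field
from typing import Any


@dataclass
class Tool:
    """An invokable function the model can ask the server to run."""
    name: str
    description: str
    inputSchema: dict[str, Any]  # JSON Schema describing the arguments


@dataclass
class Resource:
    """A readable data source identified by a URI."""
    uri: str
    name: str
    mimeType: str


@dataclass
class Prompt:
    """A predefined interaction template with optional arguments."""
    name: str
    description: str
    arguments: list[dict[str, Any]] = field(default_factory=list)


# Hypothetical capabilities of an imaginary ticketing server.
TOOLS = [
    Tool(
        name="create_ticket",
        description="Open a new support ticket.",
        inputSchema={
            "type": "object",
            "properties": {"title": {"type": "string"}},
            "required": ["title"],
        },
    )
]
RESOURCES = [
    Resource(uri="tickets://open", name="Open tickets", mimeType="application/json")
]
PROMPTS = [
    Prompt(
        name="summarize_ticket",
        description="Summarize a ticket for a handover note.",
        arguments=[{"name": "ticket_id", "required": True}],
    )
]


def describe_server() -> dict[str, list[dict[str, Any]]]:
    """Return everything the server exposes, grouped by capability type."""
    return {
        "tools": [asdict(t) for t in TOOLS],
        "resources": [asdict(r) for r in RESOURCES],
        "prompts": [asdict(p) for p in PROMPTS],
    }
```

The point of the grouping is that a Host can treat any server the same way: list what it offers, then decide which tools to call, which resources to read, and which prompts to surface to the user.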

How MCP works

MCP is like a universal “adapter” or “translator” that helps your AI assistant talk to any app or data source using the same simple language.

- The AI assistant (Host) wants to get some info or do something for you.

- It talks to an MCP Client inside the app, which knows how to connect to MCP Servers.

- The MCP Client connects to an MCP Server – a program that knows how to get data or do tasks from a specific place (like your files, a calendar, or a website).

- The MCP Server tells the client what it can do, like “I can read files” or “I can check your calendar.”

- When the AI assistant needs something, it asks the MCP Client to request it from the MCP Server.

- The MCP Server gets the info or performs the task, then sends the answer back.

- The AI assistant uses this fresh info to give you a better, more accurate response.
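The steps above can be sketched as a short message exchange. MCP messages are JSON-RPC 2.0, and `initialize`, `tools/list`, and `tools/call` are method names from the specification. Everything else here is an assumption made for illustration: the in-process `FakeCalendarServer`, the `check_calendar` tool, and the `answer_question` helper are hypothetical, there is no real transport, and the follow-up `notifications/initialized` message of the real handshake is omitted for brevity.

```python
import json
from typing import Any, Optional

PROTOCOL_VERSION = "2025-03-26"  # one published MCP revision; adjust as needed


class FakeCalendarServer:
    """Hypothetical in-process stand-in for an MCP Server (no network, no SDK)."""

    def handle(self, request: dict[str, Any]) -> dict[str, Any]:
        method = request["method"]
        params = request.get("params", {})
        if method == "initialize":
            result = {
                "protocolVersion": params["protocolVersion"],
                "capabilities": {"tools": {}},
                "serverInfo": {"name": "fake-calendar", "version": "0.1"},
            }
        elif method == "tools/list":
            # The server tells the client what it can do.
            result = {
                "tools": [
                    {
                        "name": "check_calendar",
                        "description": "List meetings on a given day.",
                        "inputSchema": {
                            "type": "object",
                            "properties": {"day": {"type": "string"}},
                            "required": ["day"],
                        },
                    }
                ]
            }
        elif method == "tools/call":
            # Only one tool exists here, so the tool name is not checked.
            day = params["arguments"]["day"]
            result = {
                "content": [{"type": "text", "text": f"No meetings on {day}."}],
                "isError": False,
            }
        else:
            return {
                "jsonrpc": "2.0",
                "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"},
            }
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}


class Client:
    """Keeps a one-to-one connection with a single server."""

    def __init__(self, server: FakeCalendarServer) -> None:
        self.server = server
        self._next_id = 0

    def request(self, method: str, params: Optional[dict[str, Any]] = None) -> dict[str, Any]:
        self._next_id += 1
        message = {"jsonrpc": "2.0", "id": self._next_id, "method": method, "params": params or {}}
        # Round-trip through JSON to mimic what would travel over a real transport.
        reply = self.server.handle(json.loads(json.dumps(message)))
        if "error" in reply:
            raise RuntimeError(reply["error"]["message"])
        return reply["result"]


def answer_question(client: Client, day: str) -> str:
    """What a Host might do: connect, discover tools, call one, use the result."""
    client.request(
        "initialize",
        {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {},
            "clientInfo": {"name": "demo-host", "version": "0.1"},
        },
    )
    tool_names = [t["name"] for t in client.request("tools/list")["tools"]]
    if "check_calendar" not in tool_names:
        return "This server cannot check calendars."
    result = client.request("tools/call", {"name": "check_calendar", "arguments": {"day": day}})
    return result["content"][0]["text"]


if __name__ == "__main__":
    print(answer_question(Client(FakeCalendarServer()), "Friday"))
```

In a real deployment the Host would pass the discovered tool list to the model and let the model decide which tool to call; here that decision is hard-coded to keep the sketch short.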

What does MCP mean for AI applications?

The widespread adoption of MCP across major AI platforms has several key implications:

- Enhanced AI capabilities

MCP enables AI models to access and interact with external data sources, business tools, and software applications, dramatically improving the relevance, accuracy, and usefulness of AI-driven responses by allowing real-time access to up-to-date information.

- Reduced vendor lock-in

Organizations can build AI systems without fear of being trapped with a single provider. If one model doesn’t suit their needs, they can swap in another MCP-compatible model without rebuilding their architecture.

- Simplified development

Developers now only need to learn one protocol to connect to a wide range of systems, dramatically reducing the complexity of AI integration.

- Lower barriers to entry

Startups and smaller companies can build specialized services that plug into any MCP-compatible system without asking users to adopt another proprietary platform.

Considerations, risks, and challenges of adopting MCP

While the Model Context Protocol (MCP) offers a compelling solution to the M×N problem and the fragmentation of AI integrations, it’s not without its own set of challenges. For organizations evaluating MCP adoption, it’s important to weigh the trade-offs, risks, and limitations involved to ensure successful implementation and long-term sustainability.

1. Ecosystem maturity and tooling gaps

Although MCP has gained traction and early industry support, it’s still an emerging standard. This means:

- Documentation, SDKs, and developer tooling may be limited or inconsistent across languages.

- Community-contributed connectors may vary in quality, security, and support.

- Enterprises may need to invest time in building or maintaining MCP Servers for less common tools.

Consideration: Early adopters may face a steeper learning curve and must be prepared to contribute to the ecosystem or build custom infrastructure to fill gaps.

2. Security and access control complexity

Giving AI models structured access to tools, APIs, and data sources through MCP raises legitimate security and compliance concerns, especially in regulated industries.

- Fine-grained access controls must be enforced at both the MCP Server and Host level.

- Credential management, sandboxing, and audit logging become critical for preventing misuse or data leakage.

- Role-based permissions must be designed to avoid overexposing sensitive tools or resources to the model.

Risk: Improper implementation can lead to over-permissioned AI agents, accidental data exposure, or compliance violations.
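One way to picture Host-level enforcement is a thin guard in front of every tool call. The sketch below is not part of MCP itself; the roles, tool names, and the `guarded_call` helper are hypothetical, and a production setup would also enforce permissions on the Server side and manage credentials separately.

```python
import logging
from typing import Any, Callable

audit_log = logging.getLogger("mcp.audit")

# Hypothetical role-to-tool allowlist maintained by the Host.
ROLE_ALLOWED_TOOLS: dict[str, set[str]] = {
    "support_agent": {"lookup_order"},
    "finance_admin": {"lookup_order", "issue_refund"},
}


class ToolNotPermitted(Exception):
    """Raised when a role tries to call a tool outside its allowlist."""


def guarded_call(
    role: str,
    tool_name: str,
    arguments: dict[str, Any],
    call_tool: Callable[[str, dict[str, Any]], Any],
) -> Any:
    """Check the allowlist, write an audit entry, then forward the call."""
    allowed = ROLE_ALLOWED_TOOLS.get(role, set())
    if tool_name not in allowed:
        audit_log.warning("DENY role=%s tool=%s", role, tool_name)
        raise ToolNotPermitted(f"{role} may not call {tool_name}")
    # Log argument names only, so sensitive values stay out of the audit trail.
    audit_log.info("ALLOW role=%s tool=%s args=%s", role, tool_name, sorted(arguments))
    return call_tool(tool_name, arguments)
```

Unknown roles get an empty allowlist, so the default is to deny. That default matters more than the specific mechanism: an agent should only reach tools someone has explicitly granted.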

3. Operational overhead and resource management

MCP introduces persistent communication between Hosts, Clients, and Servers—especially in long-lived AI sessions or agentic workflows.

- Hosting and managing multiple MCP Servers (especially in production) introduces operational complexity.

- Resource subscriptions, tool availability, and concurrency need to be managed carefully to prevent service degradation.

- Observability and debugging across this distributed setup can be non-trivial.

Challenge: Teams need robust monitoring, logging, and error handling frameworks in place to maintain system reliability.

4. Standardization trade-offs

While MCP aims to standardize AI-tool communication, it may not support highly specialized or domain-specific integrations out of the box. Organizations may face limitations when:

- Tools require custom interaction patterns not yet modeled in MCP.

- Real-time, stateful tools (e.g., multiplayer editors or live dashboards) exceed the protocol’s current capabilities.

- Some use cases demand tighter coupling than MCP’s abstraction permits.

Limitation: MCP is a general-purpose protocol, which may not be ideal for niche use cases requiring deeply customized or low-latency integrations.

5. Dependency on ecosystem stability

With industry players like Anthropic, Microsoft, and OpenAI supporting MCP, momentum is growing. However, widespread success depends on continued ecosystem alignment and community investment.

- Fragmentation of the standard could result in competing protocols, splintered compatibility, or vendor lock-in.

- A slowdown in development or abandonment by major contributors would affect long-term viability.

Risk: Businesses investing heavily in MCP must monitor the standard’s evolution and be ready to adapt if the ecosystem shifts.

Practical examples of MCP utilization

1. PayPal: Natural language interfaces for commerce

PayPal’s MCP Server connects large language models (LLMs) directly to core commerce APIs. This allows AI agents to:

- Manage inventory

- Process payments

- Coordinate shipping

- Handle refunds

– all through natural language commands.

This isn’t a prototype – it’s a live system that eliminates manual workflows, improves response times, and empowers AI to support day-to-day operations in real-time.

2. Sentry: AI-enhanced developer workflows

In software development, Sentry uses MCP to surface live error tracking data to AI assistants like Claude and GPT-4. Through this setup, developers can:

- Detect and triage bugs

- Automate environment setup

- Query performance metrics

– without leaving their IDEs.

This reduces context switching, speeds up debugging, and makes development environments smarter and more efficient.

3. Vectorize: Contextual search for enterprise knowledge

Vectorize enables organizations to expose internal data (documents, wikis, PDFs) via vector search APIs powered by MCP. This allows AI models to:

- Access up-to-date internal knowledge

- Understand proprietary terminology

- Deliver responses with context, not just relevance

It replaces outdated FAQ bots and disconnected knowledge bases with intelligent, contextual search that grows with the organization.

4. Block & Apollo: Scalable AI integrations

Fast-growing companies like Block and Apollo are using MCP to connect AI with systems such as:

- Analytics dashboards

- Payment processors

- Communication tools

By standardizing integration through MCP, they avoid the overhead of building dozens of fragile, custom connectors. The result? Scalable, plug-and-play AI that adapts as their operations evolve.

Decision framework: Is MCP right for your organization?

Adopting the Model Context Protocol (MCP) represents more than a technical upgrade – it’s a foundational decision about how your organization will scale AI capabilities across systems, teams, and workflows. To help guide this decision, we recommend a phased approach, combined with key strategic questions to assess fit.

A phased approach to MCP adoption

Instead of attempting a full-scale rollout, organizations can take a staged approach that balances experimentation with long-term planning:

Phase 1: Assess integration pain points

- Audit current AI projects and the systems they rely on (APIs, databases, internal tools).

- Identify repetitive, brittle, or bespoke connectors slowing down development.

Phase 2: Pilot with low-risk, high-value systems

- Select a small number of systems (e.g. GitHub, Postgres, Slack) and expose them via MCP Servers.

- Use existing MCP Hosts (like IDEs or chat UIs) to experiment with functionality.

Phase 3: Standardize internal AI integration strategy

- Develop internal best practices and governance for using MCP.

- Evaluate open-source vs. proprietary MCP components.

- Build reusable connectors or contribute back to the ecosystem.

Phase 4: Scale and operationalize

- Roll out MCP to additional teams or departments.

- Implement observability, role-based access, and audit logging for production use.

- Align with IT and security to ensure compliance and uptime.

Questions executives should ask their technical teams

To properly evaluate whether MCP aligns with organizational goals, executives should ask:

- Where are we spending the most time building one-off AI integrations?

- How often do we repeat similar connector logic across teams or tools?

- Are our AI assistants currently limited by lack of access to real-time tools or data?

- Do we have multiple AI models or platforms that need to interact with the same systems?

- What’s our current strategy for managing AI access to internal systems securely?

- Could a shared, protocol-based approach reduce our integration and maintenance burden?

The MCP momentum: Why now and what comes next?

Just over a year ago, the concept of AI agents accessing real-world tools, data, and APIs without bespoke integration seemed futuristic at best. Today, it’s not only feasible – it’s operational. MCP, an emerging open standard, is reshaping how organizations deploy large language models in production environments. And it’s enabling a new breed of AI-native applications that are faster to build, cheaper to scale, and smarter in execution.

The rise of MCP represents more than a technical convenience – it’s a strategic opportunity. At a time when AI teams face mounting pressure to deliver results, MCP’s standardization slashes integration costs, accelerates time-to-market, and supports vendor-neutral development. Organizations no longer need to bet everything on a single model or provider. With MCP, they can swap out models, rewire tools, and expand capabilities without starting from scratch.

The protocol also brings long-awaited discipline to personalization and context handling. Instead of hardcoding behavior into agents, teams can dynamically route them to the right tools, prompts, or documents, keeping interactions relevant and efficient.

The future of MCP

As more organizations experiment with MCP, its ecosystem is exploding. GitHub now hosts dozens of open-source MCP servers. Cloudflare recently demoed 13 new MCP-powered tools, including ones for finance, customer service, and logistics. Anthropic, OpenAI, and Hugging Face are all building or adopting MCP-compatible features.

Expect to see MCP become the backbone of AI platforms in the same way TCP/IP underpins the internet. In the near future, LLMs won’t just talk – they’ll act, using tools with precision, chaining functions across systems, and handling tasks with autonomy.

The organizations laying the groundwork now, by building with or adopting MCP, aren’t just optimizing. They’re preparing for a world where AI isn’t an assistant, but a partner in productivity.

FAQ: Model Context Protocol (MCP): Solution to AI Integration Bottlenecks

What is MCP vs API?

MCP is an AI-specific protocol designed for language models to interact with external systems, while APIs are general-purpose interfaces for software communication.

Key differences:

- Purpose: APIs are broad; MCP is AI-focused for providing context to language models

- Standardization: MCP provides unified AI-tool integration; APIs vary widely

- Features: MCP includes AI-native capabilities like resource subscriptions and tool discovery

Can ChatGPT use MCP?

Partly. OpenAI announced MCP support in March 2025, starting with the Agents SDK, with ChatGPT desktop app and Responses API integration to follow. Availability differs by product and has been changing quickly, so confirm the current status for the product you use in OpenAI’s documentation.

What is MCP certification?

There are emerging educational resources and training programs focused on MCP implementation, but a fully standardized or universally recognized MCP certification does not yet exist. Notable initiatives include:

- Hugging Face offers free educational materials and workshops related to MCP concepts.

- Coursera and other platforms are beginning to offer introductory courses on AI tool integration protocols that cover MCP-related topics, but not formal certification.

- Anthropic provides workshops and developer resources on its MCP architecture and tools, but does not currently offer an official certification program.

How to build an MCP server?

To build an MCP server, follow these best practices:

- Choose your architecture approach: local, remote, or hybrid, depending on your system needs.

- Implement the essential components: tools (invokable functions), resources (readable data sources), and prompts (templates), in compliance with the MCP specification.

- Adhere strictly to protocol standards, including capability negotiation and secure communication, to ensure interoperability.

- Use available SDKs, such as the official Python and TypeScript SDKs, the C# SDK developed with Microsoft, or community-built SDKs, alongside Anthropic’s MCP reference documentation.

- Thoroughly test the integration by connecting your MCP server to MCP Hosts like Claude Desktop or compatible IDEs.
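To show what the tool-handling part of a server involves, here is a minimal, SDK-free sketch. In practice an SDK would handle the transport, the handshake, and schema generation for you. The `tool` decorator, the `add` example, and the helper names are hypothetical; the result shapes (`tools`, `inputSchema`, `content`, `isError`) follow the MCP specification as we understand it, and the generated schema is deliberately crude.

```python
import inspect
from typing import Any, Callable

# Registry of tool name -> Python function (hypothetical helper, not an SDK API).
_TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so it can be listed and called as a tool."""
    _TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: float, b: float) -> float:
    """Add two numbers."""
    return a + b


def list_tools() -> dict[str, Any]:
    """Build the result of a tools/list request from the registry."""
    tools = []
    for name, fn in _TOOLS.items():
        params = inspect.signature(fn).parameters
        tools.append(
            {
                "name": name,
                "description": inspect.getdoc(fn) or "",
                # Crude schema: every parameter is required and accepts any type.
                "inputSchema": {
                    "type": "object",
                    "properties": {p: {} for p in params},
                    "required": list(params),
                },
            }
        )
    return {"tools": tools}


def call_tool(name: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Build the result of a tools/call request, reporting failures via isError."""
    try:
        value = _TOOLS[name](**arguments)
    except Exception as exc:  # unknown tool, bad arguments, or a failure inside the tool
        return {
            "content": [{"type": "text", "text": f"{type(exc).__name__}: {exc}"}],
            "isError": True,
        }
    return {"content": [{"type": "text", "text": str(value)}], "isError": False}


if __name__ == "__main__":
    print(list_tools())
    print(call_tool("add", {"a": 2, "b": 3}))
```

Returning failures as a result with `isError` set, rather than raising, lets the model see what went wrong and try again with corrected arguments.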

How much does Claude MCP super agent cost?

No separate “super agent” product exists. MCP functionality is integrated into existing Claude services:

- Claude Desktop: Standard subscription pricing applies

- Claude API: Existing API pricing based on token usage

- MCP itself: The specification and official SDKs are free and open source

Who offers the best integration services with MCP?

Official MCP support primarily comes from:

- Anthropic, the protocol’s creator and maintainer.

- Microsoft, a key partner providing a C# SDK for MCP development.

Other notable organizations involved in MCP-based integration projects include:

- Enterprise users like PayPal (commerce APIs), Block, Apollo (analytics, payments), and Sentry (developer tools).

- Developer platforms such as Zed, Replit, Codeium, and Sourcegraph that experiment with or support MCP in their environments.

- Specialized services like Vectorize (enterprise knowledge management) and Cloudflare, which offers demos of MCP tools.

The MCP ecosystem also benefits from a vibrant open-source community on GitHub, providing servers, connectors, and SDKs that enhance integration possibilities.
