August 30, 2025

What is Data Warehousing?

Author: CEO & Co-Founder


Data warehousing is the backbone of modern business intelligence, enabling organizations to collect, store, and analyze vast amounts of information. It transforms raw data from multiple sources into actionable insights, serving as a single source of truth for decision-making.

Unlike operational databases, which handle daily transactions, data warehouses are optimized for analytics and reporting.

They consolidate historical data from across business systems into one centralized, consistent repository – making it possible to analyze trends, measure performance, and gain competitive advantages.

Data Warehousing in the Big Data Era

Big Data refers to datasets that are so large, complex, or rapidly changing that traditional data processing tools cannot handle them effectively.

Big Data is typically characterized by the “5 V’s”:

- Volume: Massive amounts of data (terabytes to petabytes)

- Velocity: High-speed data generation and processing requirements

- Variety: Diverse data types including structured, semi-structured, and unstructured data

- Veracity: Data quality and trustworthiness challenges

- Value: The potential business insights hidden within the data

Data Warehouses vs. Big Data Platforms vs. Data Lakehouses

| Feature | Data Warehouses | Big Data Platforms | Data Lakehouses |
|---|---|---|---|
| Primary Use Case | Business Intelligence & Reporting | Large-scale Data Processing & ML | Unified Analytics & AI |
| Data Types | Structured (plus semi-structured in modern platforms) | Unstructured & semi-structured | All data types |
| Query Language | SQL | Various (Scala, Python, SQL) | SQL + programming languages |
| Performance | Optimized for known workloads | Variable, depends on cluster size | High performance for both BI and ML |
| Data Governance | Strong governance & compliance | Limited built-in governance | Built-in governance with flexibility |
| Storage Format | Relational tables | Files (Parquet, JSON, etc.) | Open formats (Delta, Iceberg) |
| Scalability | Vertical & horizontal scaling | Massive horizontal scaling | Elastic scaling |
| Cost Model | Pay for compute + storage | Pay for cluster time | Pay for usage |
| Ease of Use | User-friendly for analysts | Requires technical expertise | Balanced: accessible to both |
| Real-time Processing | Limited | Excellent (Spark Streaming) | Good (streaming + batch) |
| Machine Learning | Basic analytics | Advanced ML capabilities | Native ML integration |
| Examples | Snowflake, Redshift, BigQuery | Hadoop, Spark, Databricks | Databricks Lakehouse, Snowflake |
| Best For | Traditional BI, reporting, dashboards | Data science, ETL at scale, exploration | Modern analytics, AI/ML, unified platform |

How Data Warehouses Handle Big Data Challenges

Traditional data warehouses were designed primarily for structured data from internal business systems.

However, the Big Data revolution has fundamentally transformed data warehousing in several key ways:

- Volume scaling: Modern cloud data warehouses such as Snowflake, BigQuery, and Redshift can scale to petabyte-scale datasets, in many configurations automatically. They separate storage from compute, allowing organizations to store vast amounts of data cost-effectively while scaling processing power as needed.

- Velocity management: Real-time and near-real-time data processing capabilities have been integrated into modern data warehouses. Technologies like streaming ETL and real-time data pipelines enable warehouses to process high-velocity data streams from IoT devices, social media, and transaction systems.

- Variety support: Contemporary data warehouses now support semi-structured data formats like JSON, XML, and Parquet files alongside traditional structured data. This enables organizations to warehouse data from web applications, social media, and other sources that don’t fit traditional relational models.

The evolution of data warehousing capabilities has blurred the traditional boundaries in the Big Data vs Data Warehouse debate. While Big Data platforms like Hadoop were once necessary for handling massive, unstructured datasets, modern data warehouses now incorporate many Big Data processing capabilities directly.

This convergence means organizations often no longer need to choose between structured analytics and Big Data processing; instead, they can use unified platforms that combine the governance and performance of traditional data warehouses with the flexibility and scale of Big Data technologies.
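To make the variety point concrete, here is a minimal sketch of loading semi-structured JSON next to structured columns. Cloud warehouses provide their own types for this (for example, Snowflake's VARIANT or BigQuery's JSON type); the sketch instead assumes Python's built-in `sqlite3` as a stand-in warehouse, and the event payloads and the `events` table are hypothetical. It projects known fields into typed columns and keeps the raw payload for later re-parsing.

```python
import json
import sqlite3

# Hypothetical semi-structured events from a web application; fields vary per record.
RAW_EVENTS = [
    '{"user_id": 1, "event": "page_view", "page": {"url": "/pricing"}}',
    '{"user_id": 2, "event": "signup", "plan": "pro"}',
    '{"user_id": 1, "event": "page_view", "page": {"url": "/docs"}, "referrer": "ads"}',
]


def load_events(conn: sqlite3.Connection, raw_events: list) -> None:
    """Project known fields into typed columns and keep the raw JSON alongside them."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS events (
               user_id INTEGER,
               event   TEXT,
               url     TEXT,  -- NULL when the event has no nested page object
               raw     TEXT   -- untouched payload, so new fields can be parsed later
           )"""
    )
    rows = []
    for line in raw_events:
        doc = json.loads(line)
        rows.append((doc["user_id"], doc["event"], doc.get("page", {}).get("url"), line))
    with conn:  # commit the whole batch as one transaction
        conn.executemany("INSERT INTO events VALUES (?, ?, ?, ?)", rows)


conn = sqlite3.connect(":memory:")
load_events(conn, RAW_EVENTS)

# Once flattened, the semi-structured data is queried with ordinary SQL.
views = conn.execute(
    "SELECT url, COUNT(*) FROM events WHERE event = 'page_view' GROUP BY url ORDER BY url"
).fetchall()
print(views)  # [('/docs', 1), ('/pricing', 1)]
```

Keeping the raw payload is a deliberate choice: if a new field becomes important later, it can be extracted from history without going back to the source system.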

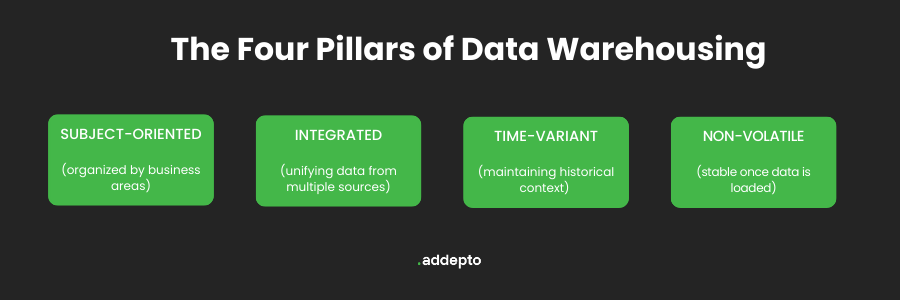

The Four Pillars of Data Warehousing

The four pillars of data warehouse design come from William Inmon, who defined a data warehouse as a subject-oriented, integrated, time-variant, and non-volatile collection of data that supports management's decision-making. These properties distinguish a warehouse from an operational database and shape how it supports analytical processing and business decision-making.

- Subject-oriented

A data warehouse is subject-oriented, meaning it organizes data around key business areas (such as sales, finance, or human resources) rather than individual transactions or processes. This allows organizations to analyze data by specific themes and perform in-depth analysis within focused domains.

- Integrated

The integration pillar ensures that the data warehouse brings together data from multiple sources (such as databases, flat files, and external APIs), cleaning and standardizing it into a unified system. This process resolves inconsistencies in naming, formats, and measurement units, providing a consistent and reliable view across the organization.

- Time-variant

A data warehouse is time-variant, which means it stores historical data and associates changes with specific time periods (e.g., by days, weeks, months, or years). This enables organizations to analyze trends, compare current and past data, and generate long-term business insights.

- Non-volatile

Once data enters the warehouse, it remains stable over time: records are typically appended rather than updated or deleted. This stability provides a consistent record for analysis, so historical reports and audit trails remain reproducible.
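Three of the pillars can be seen together in a single table design. The sketch below is illustrative only. It assumes Python's built-in `sqlite3` as a stand-in warehouse and a hypothetical `inventory_snapshot` table. The table covers one business subject (inventory), stamps every row with a snapshot date (time-variant), and uses triggers to reject updates and deletes so history can only be appended (non-volatile). Real warehouses usually enforce this through load processes and permissions rather than triggers.

```python
import sqlite3
from datetime import date

conn = sqlite3.connect(":memory:")

# Subject-oriented: the table describes one business subject (inventory levels).
# Time-variant: every row is stamped with the date the snapshot was taken.
conn.execute(
    """CREATE TABLE inventory_snapshot (
           snapshot_date TEXT    NOT NULL,
           product_id    TEXT    NOT NULL,
           units_on_hand INTEGER NOT NULL,
           PRIMARY KEY (snapshot_date, product_id)
       )"""
)

# Non-volatile: triggers reject UPDATE and DELETE, so history can only be appended.
for op in ("UPDATE", "DELETE"):
    conn.execute(
        f"CREATE TRIGGER no_{op.lower()} BEFORE {op} ON inventory_snapshot "
        "BEGIN SELECT RAISE(ABORT, 'inventory_snapshot is append-only'); END"
    )


def append_snapshot(day: date, levels: dict) -> None:
    """Append one day's stock levels; earlier snapshots are never rewritten."""
    with conn:
        conn.executemany(
            "INSERT INTO inventory_snapshot VALUES (?, ?, ?)",
            [(day.isoformat(), product, units) for product, units in levels.items()],
        )


append_snapshot(date(2025, 8, 1), {"A": 120, "B": 40})
append_snapshot(date(2025, 8, 2), {"A": 95, "B": 55})

# Attempting to overwrite history fails at the database level.
try:
    with conn:
        conn.execute("UPDATE inventory_snapshot SET units_on_hand = 0")
    update_rejected = False
except sqlite3.DatabaseError as exc:
    update_rejected = True
    print("rejected:", exc)

# Because every day is kept, trends can be read straight from the table.
trend = conn.execute(
    "SELECT snapshot_date, units_on_hand FROM inventory_snapshot "
    "WHERE product_id = 'A' ORDER BY snapshot_date"
).fetchall()
print(trend)  # [('2025-08-01', 120), ('2025-08-02', 95)]
```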

Data Warehousing and ETL

ETL (Extract, Transform, Load) and data warehousing are deeply interconnected: the ETL process is the pipeline that feeds a data warehouse with clean, consistent, structured data for analytics and reporting.

- ETL is the data ingestion engine: The ETL process extracts raw data from various source systems (such as databases, files, and APIs), transforms it for quality and consistency (cleaning, filtering, formatting), and loads it into the data warehouse. Without ETL, data warehouses would lack the reliable input mechanisms needed for effective analytics.

- The data warehouse is the analytical repository: It serves as the central, structured storage location where data is organized, indexed, and made ready for business intelligence queries and reporting. It provides the stable foundation that makes complex analytics possible.
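The three ETL steps can be sketched in a few functions. This is a minimal illustration, not a production pipeline. It assumes two hypothetical sources that disagree on formats: a CSV export with US-style dates and dollar amounts, and API records with ISO dates and amounts in cents. It also assumes an in-memory SQLite database as a stand-in warehouse. The transform step also shows the "integrated" pillar at work: naming, date formats, units, and casing are standardized, and rows that fail a simple validation rule are dropped.

```python
import csv
import io
import json
import sqlite3
from datetime import datetime

# Hypothetical source 1: a CSV export with US-style dates and dollar amounts.
CSV_EXPORT = """order_id,order_date,amount_usd,region
1001,08/01/2025,19.99,north
1002,08/01/2025,,south
1003,08/02/2025,5.00,NORTH
"""

# Hypothetical source 2: API records with ISO dates and amounts in cents.
API_RECORDS = json.dumps([
    {"id": "A-7", "date": "2025-08-02", "amount_cents": 1250, "region": "South"},
])


def extract():
    """Extract: read raw records from each source without changing them."""
    yield from (("csv", row) for row in csv.DictReader(io.StringIO(CSV_EXPORT)))
    yield from (("api", rec) for rec in json.loads(API_RECORDS))


def transform(source, rec):
    """Transform: map each source onto one schema; return None if validation fails."""
    if source == "csv":
        if not rec["amount_usd"]:
            return None  # validation rule: an amount is required
        order_id = f"csv-{rec['order_id']}"
        day = datetime.strptime(rec["order_date"], "%m/%d/%Y").date()
        cents = round(float(rec["amount_usd"]) * 100)
    else:
        order_id = f"api-{rec['id']}"
        day = datetime.strptime(rec["date"], "%Y-%m-%d").date()
        cents = int(rec["amount_cents"])
    return (order_id, day.isoformat(), cents, rec["region"].strip().lower())


def load(conn, rows):
    """Load: write the cleaned rows into the warehouse table in one transaction."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales "
        "(order_id TEXT PRIMARY KEY, order_date TEXT, amount_cents INTEGER, region TEXT)"
    )
    with conn:
        conn.executemany("INSERT INTO sales VALUES (?, ?, ?, ?)", rows)


conn = sqlite3.connect(":memory:")
clean = [row for row in (transform(s, r) for s, r in extract()) if row is not None]
load(conn, clean)
totals = conn.execute(
    "SELECT region, SUM(amount_cents) FROM sales GROUP BY region ORDER BY region"
).fetchall()
print(totals)  # [('north', 2499), ('south', 1250)]
```

Amounts are stored as integer cents so that values arriving in different units end up in one exact representation. In practice, dedicated ETL/ELT and orchestration tools add scheduling, retries, and monitoring around these same three steps.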

A data warehouse depends on robust ETL pipelines for accurate, up-to-date, analysis-ready data; when those pipelines fail or drift, the warehouse quickly becomes stale or inconsistent. Conversely, the structure and requirements of the data warehouse guide how ETL processes are designed.

In summary, ETL acts as the trusted bridge – preparing and delivering data into the data warehouse, ensuring that the data used for business analysis is trustworthy, timely, and actionable. The effectiveness of the whole data warehousing solution depends greatly on the quality and reliability of the ETL process.

Data Warehouse Implementation

Data Warehouse implementation requires careful planning and a phased approach to ensure successful deployment and user adoption.

Requirements Assessment: Identify key business questions, define success metrics, and determine high-value data sources. Engage stakeholders to understand reporting needs and analytical requirements.

Phased Approach:

- Phase 1: Establish core infrastructure and basic ETL for one business area

- Phase 2: Expand data sources and implement data quality controls

- Phase 3: Deploy reporting tools and train users

- Phase 4: Optimize performance and expand to additional areas

Common Challenges

- Data quality: Inconsistent formats and missing values can undermine effectiveness. Implement validation rules and cleansing procedures early.

- Integration complexity: Start with well-structured, high-value data sources before tackling complex system integrations.

- User adoption: Provide comprehensive training and demonstrate clear business value to encourage engagement.

- Performance issues: Implement proper indexing and partitioning strategies to maintain fast query response times.
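The effect of indexing on a typical date-range query can be checked directly. The sketch below assumes SQLite (via Python's `sqlite3`) as a stand-in and uses a hypothetical `sales` table with generated rows. It compares the query plan before and after adding an index on the date column. Partitioning is engine-specific (for example, BigQuery partitioned tables, Redshift sort keys, or Snowflake clustering keys) and is not modeled here, since SQLite has no native table partitioning.

```python
import sqlite3
from datetime import date, timedelta

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sale_date TEXT, region TEXT, amount_cents INTEGER)")

# Hypothetical fact rows: 20,000 sales spread over one year (deterministic values).
start = date(2024, 1, 1)
rows = [
    (
        (start + timedelta(days=i % 366)).isoformat(),
        "north" if i % 2 else "south",
        100 + (i * 37) % 10_000,
    )
    for i in range(20_000)
]
with conn:
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)

QUERY = "SELECT SUM(amount_cents) FROM sales WHERE sale_date BETWEEN ? AND ?"
MARCH = ("2024-03-01", "2024-03-31")


def plan(sql: str, params: tuple) -> list:
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]


before = plan(QUERY, MARCH)  # without an index, SQLite must scan the whole table
total_before = conn.execute(QUERY, MARCH).fetchone()[0]

conn.execute("CREATE INDEX idx_sales_date ON sales (sale_date)")

after = plan(QUERY, MARCH)  # with the index, SQLite can seek to the date range
total_after = conn.execute(QUERY, MARCH).fetchone()[0]

print(before)
print(after)
```

The exact plan wording varies between SQLite versions, but the second plan should reference `idx_sales_date`, while the query result itself is unchanged: indexes change how rows are found, not which rows match.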

Conclusion: Data Warehousing in Business

Modern data warehouses remain essential for businesses leveraging data as a strategic asset, delivering scalable foundations for decision-making in the digital era.

However, the complexity of Data Warehouse implementation makes partnering with experienced professionals crucial for success. These projects involve numerous technical decisions from architecture design to ETL optimization, and organizations that attempt implementation alone often encounter costly delays and performance issues.
