December 13, 2024

What is Entropy in Machine Learning?

Author: CSO & Co-Founder

Reading time: 2 minutes

Entropy in machine learning measures how random, or uncertain, data is, which affects how accurately outcomes can be predicted from it.

Key Takeaways

- Entropy in machine learning measures the randomness or disorder in information.

- High entropy indicates less predictable and less actionable data, while low entropy means more structured and usable data.

- Entropy is critical in decision trees, helping determine the most efficient data splits for accurate predictions.

Understanding Entropy in Machine Learning

In physics, entropy measures the randomness in a closed system. Similarly, in machine learning, entropy quantifies how uncertain an outcome is, for example how mixed the class labels in a dataset are. Lower entropy means the data is more predictable and easier to interpret, while higher entropy means the outcomes are closer to random and harder to predict.
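For reference, the usual formal definition behind this intuition is Shannon entropy. The formula is not given in the original article; the symbols $X$, $p_i$ and $n$ are notation introduced here for illustration.

$$
H(X) = -\sum_{i=1}^{n} p_i \log_2 p_i
$$

Here $p_i$ is the probability of the $i$-th of $n$ possible outcomes. $H(X)$ is 0 when one outcome is certain and reaches its maximum, $\log_2 n$ bits, when all outcomes are equally likely.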

Illustrating Entropy with a Coin Toss

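Consider flipping a fair coin. Each toss has two equally likely outcomes (heads or tails), so the result cannot be predicted better than chance. This is the highest-entropy case for an event with two outcomes. Entropy describes the average uncertainty over all outcomes, whereas a single rare outcome, like flipping tails ten times consecutively, carries more information than a common one because it is more surprising.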
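The coin example can be checked numerically. The following is a minimal sketch that assumes entropy is measured with Shannon's definition in bits; the helper names `shannon_entropy` and `surprisal` are hypothetical and chosen only for this illustration.

```python
import math


def shannon_entropy(probabilities):
    """Return the Shannon entropy, in bits, of a discrete distribution."""
    # Outcomes with zero probability contribute nothing to the sum.
    return sum(-p * math.log2(p) for p in probabilities if p > 0)


def surprisal(probability):
    """Return the information content, in bits, of one specific outcome."""
    return -math.log2(probability)


fair_coin = shannon_entropy([0.5, 0.5])    # two equally likely outcomes
biased_coin = shannon_entropy([0.9, 0.1])  # more predictable, lower entropy
ten_tails = surprisal(0.5 ** 10)           # one rare, highly surprising outcome

print(f"fair coin:   {fair_coin:.3f} bits")
print(f"biased coin: {biased_coin:.3f} bits")
print(f"ten tails in a row: {ten_tails:.1f} bits of information")
```

The fair coin comes out at 1 bit, the biased coin at roughly 0.47 bits, and the ten-tails streak at 10 bits, which matches the intuition that predictable sources have low entropy and rare events are highly informative.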

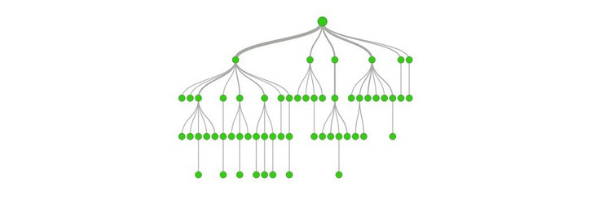

Entropy in Decision Trees

Decision trees, widely used for classification and regression tasks, commonly use entropy as a criterion for choosing splits in classification. These models consist of nodes (data splits) and leaves (outcomes). At each node, the tree calculates how much each candidate variable would reduce entropy (the information gain) and splits on the variable that reduces it most. Low entropy in the resulting groups means each leaf contains mostly one class, which makes predictions based on historical data more reliable.
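How entropy can guide the choice of a split variable is sketched below. This is an illustrative, simplified version of the information-gain calculation for categorical features, not the implementation of any particular library; the function names and the tiny dataset are made up for the example.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels."""
    total = len(labels)
    return sum(-(n / total) * math.log2(n / total) for n in Counter(labels).values())


def information_gain(rows, labels, feature):
    """Parent entropy minus the size-weighted entropy of the child groups
    produced by splitting on one categorical feature."""
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(row[feature], []).append(label)
    weighted_child_entropy = sum(
        len(group) / len(labels) * entropy(group) for group in groups.values()
    )
    return entropy(labels) - weighted_child_entropy


# Hypothetical toy data, for illustration only.
rows = [
    {"outlook": "sunny", "windy": False},
    {"outlook": "sunny", "windy": True},
    {"outlook": "rainy", "windy": False},
    {"outlook": "rainy", "windy": True},
]
labels = ["play", "play", "stay", "stay"]

gains = {f: information_gain(rows, labels, f) for f in ("outlook", "windy")}
best_feature = max(gains, key=gains.get)
print(gains, best_feature)
```

In this toy data, splitting on `outlook` separates the two classes perfectly (a gain of 1 bit), while splitting on `windy` leaves both groups as mixed as before (a gain of 0), so an entropy-based tree would split on `outlook` first.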

Source: opendatascience.com

Why Entropy Matters

Choosing splits and features that minimize entropy improves prediction accuracy and decision-making. Entropy also helps prioritize variables: those that reduce it most carry the most information about the target, which improves the performance of models like decision trees.

Conclusion

Entropy is a foundational concept in machine learning because it quantifies how much uncertainty remains in data. Splits and features that lower entropy leave more structured, more predictable groups, which enables accurate predictions and effective decision-making in ML projects.
