Databricks Deployment Services

Scale AI and ML securely with Databricks – cutting costs, simplifying workflows, and driving business impact while keeping risk under control.

Databricks Implementation Benefits

Databricks delivers cost savings and faster outcomes across analytics, AI, and reporting.

Databricks replaces siloed tools for data engineering, machine learning, and analytics, which can reduce annual spend on infrastructure and licenses by $500K–$2M. More importantly, it accelerates outcomes: fraud detection shifts from batch to real-time, churn prediction models deploy 5x faster, and reporting becomes self-service.

For example, Regeneron cut drug discovery timelines by 18 months, while Shell reduced seismic analysis from 6 months to 2 weeks.

Databricks integrates seamlessly with 100+ existing systems without data migration.

Databricks connects to 100+ systems (Snowflake, SAP, Salesforce, Oracle, AWS S3, and more) without requiring data migration, so your teams can analyze data across warehouses and lakes in one unified environment.

No ETL rewrites are needed: existing jobs in Informatica or Talend keep running, while new workloads are built in Databricks.

Databricks ensures enterprise-grade governance, security, and regulatory compliance.

Unity Catalog gives full visibility and control. It tracks every access to sensitive data and enforces column-level permissions. It also helps automate GDPR/CCPA compliance tasks such as consent tracking and data deletion.
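
Unity Catalog applies column masks and records audit logs inside the platform itself. The plain-Python sketch below only illustrates the underlying idea: a per-column policy decides who sees raw values, and every read is logged. The policy table, group names, `AuditLog`, and `read_rows` are hypothetical and are not the Unity Catalog API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical policy table: groups allowed to see each sensitive column unmasked.
# Columns not listed here are treated as non-sensitive.
COLUMN_POLICIES = {
    "email": {"privacy_officers"},
    "ssn": {"privacy_officers", "hr_admins"},
}

MASK = "***MASKED***"


@dataclass
class AuditLog:
    """In-memory stand-in for an access audit log (hypothetical)."""
    entries: list = field(default_factory=list)

    def record(self, user: str, table: str, columns) -> None:
        # One entry per read, so every access to the table is traceable.
        self.entries.append({
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "table": table,
            "columns": sorted(columns),
        })


def read_rows(rows: list[dict], user: str, groups: set[str], table: str,
              audit: AuditLog) -> list[dict]:
    """Return rows with protected columns masked unless one of the user's groups is allowed."""
    audit.record(user, table, rows[0].keys() if rows else [])
    result = []
    for row in rows:
        visible = {}
        for col, value in row.items():
            allowed = COLUMN_POLICIES.get(col)
            # Unlisted columns pass through; listed ones need a matching group.
            visible[col] = value if (allowed is None or groups & allowed) else MASK
        result.append(visible)
    return result
```

In this sketch, an analyst reading `crm.customers` gets the `id` column but masked `email` and `ssn` values. A member of `hr_admins` sees `ssn` but not `email`. Both reads land in the audit log.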

The platform holds a SOC 2 Type II attestation, supports HIPAA-compliant workloads, and is designed for regulated industries, providing enterprise-grade security by default.

Databricks protects you from vendor lock-in with open formats and multi-cloud support.

Your data remains in the open Delta Lake format (Parquet-based), ensuring portability across tools. Workflows are built on Apache Spark (open source), and ML models can be exported to standard formats such as ONNX or the MLflow model format.

Databricks runs on all major clouds (AWS, Azure, GCP), enabling a true multi-cloud strategy and reducing single-vendor risk.

Databricks drives 3–6x ROI through automation, productivity, and faster model deployment.

Databricks cuts costs not only by consolidating tools but also by eliminating manual processes and accelerating outcomes:

- Data engineering teams spend 60% less time on pipeline maintenance.

- Data scientists reduce model training cycles from weeks to hours with optimized clusters.

- Business analysts no longer rely on IT for reporting, saving thousands of hours annually.

These operational efficiencies typically translate to 3–6x ROI within the first 12–18 months.
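
ROI multiples depend on how they are defined. The sketch below assumes that ROI multiple = cumulative benefits ÷ cumulative costs over the horizon; some organizations report (benefits − costs) ÷ costs instead. The function name and all input figures are hypothetical and chosen only to show the arithmetic.

```python
def roi_multiple(annual_savings: float, annual_productivity_gain: float,
                 implementation_cost: float, annual_platform_cost: float,
                 months: int) -> float:
    """Benefit-to-cost multiple over a horizon of `months`.

    Assumption: ROI multiple = cumulative benefits / cumulative costs.
    Benefits and recurring costs are spread evenly over time; the one-off
    implementation cost is counted once.
    """
    years = months / 12
    benefits = (annual_savings + annual_productivity_gain) * years
    costs = implementation_cost + annual_platform_cost * years
    return benefits / costs
```

Take hypothetical inputs of $800K in annual tool and infrastructure savings, $700K in annual productivity gains, a $300K implementation, and $200K per year in platform cost. That gives 3.0x after 12 months and 3.75x after 18 months. A one-off implementation cost weighs less as the horizon grows, which is why multiples tend to rise over time.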

Databricks future-proofs your data strategy with open standards and continuous innovation.

Databricks is built on open standards (Delta Lake, Apache Spark, MLflow), ensuring compatibility with emerging technologies. Its lakehouse architecture scales effortlessly as your data grows from terabytes to petabytes.

Continuous platform innovation (AI copilots, vector search for GenAI, serverless compute) means your organization is never held back by legacy bottlenecks. You can adopt new use cases – from real-time personalization to generative AI – without replatforming.

Addepto Databricks Integration Process

Strategic Assessment & Architecture Design

We begin by understanding your current data landscape and business objectives. Whether you’re starting from scratch or modernizing existing infrastructure, we map out the optimal Databricks architecture for your specific needs.

Result: A customized roadmap aligned to your KPIs. It defines governance frameworks, security policies, and integration patterns. This reduces risk and ensures your Databricks deployment meets enterprise standards and sets the stage for ROI from day one.

Foundation Setup & Governance Implementation

We establish the core Databricks environment with enterprise-grade governance built in. This includes Unity Catalog configuration, workspace organization, access controls, and data quality frameworks that ensure compliance and reliability.

- Ground-up deployments: Complete infrastructure setup with best practices embedded

- Modernization projects: Governance layer implementation over existing data assets

- Quality assurance: Automated validation rules and monitoring systems

Outcome: A secure, governed foundation ready for immediate productive use.
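
Delta Live Tables expresses validation rules as expectations. Its `expect`, `expect_or_drop`, and `expect_or_fail` decorators keep, drop, or fail on rows that violate a rule. Below is a plain-Python analogue of those three behaviours, for illustration only. The `Expectation` class, `apply_expectations`, and the sample rules are hypothetical and are not the DLT API.

```python
from dataclasses import dataclass
from typing import Callable


class ExpectationFailed(Exception):
    """Raised when a row violates an expectation whose action is 'fail'."""


@dataclass
class Expectation:
    name: str
    check: Callable[[dict], bool]   # returns True when the row is valid
    action: str = "warn"            # "warn" keeps the row, "drop" removes it, "fail" stops the run


def apply_expectations(rows: list[dict], expectations: list[Expectation]):
    """Validate rows, returning (kept_rows, violation_counts_per_expectation)."""
    kept = []
    violations = {e.name: 0 for e in expectations}
    for row in rows:
        keep = True
        for e in expectations:
            if e.check(row):
                continue
            violations[e.name] += 1
            if e.action == "fail":
                raise ExpectationFailed(f"{e.name} violated by {row!r}")
            if e.action == "drop":
                keep = False
        if keep:
            kept.append(row)
    return kept, violations
```

The violation counts are the kind of metric a monitoring dashboard would chart over time. A rising count on a "warn" rule is often an early signal before it becomes a "drop" or "fail" rule.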

Platform Integration & Migration

We connect Databricks seamlessly with your existing ecosystem while modernizing legacy processes. This phase focuses on data pipeline development, system integrations, and migration of critical workloads.

- Legacy ETL modernization to Delta Live Tables

- Real-time streaming implementations

- Integration with existing warehouses and cloud services

- CI/CD pipeline establishment

Impact: Unified data platform that maintains business continuity while unlocking new capabilities.
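
Real-time streaming pipelines usually aggregate by event time and need a policy for late data. Spark Structured Streaming handles this with `withWatermark`. The simplified plain-Python sketch below counts events in tumbling windows and drops any event older than the watermark, defined here as the latest event time seen minus an allowed delay. This per-event rule is a deliberate simplification of Spark's window-state semantics, and the function and parameters are hypothetical.

```python
from collections import defaultdict


def windowed_counts(events, window_s: int, delay_s: int):
    """Count events per (window_start, key) in tumbling windows, dropping late events.

    events: iterable of (event_time_seconds, key) in arrival order.
    Simplified watermark: max event time seen so far minus delay_s.
    Returns (counts_dict, number_of_dropped_events).
    """
    counts = defaultdict(int)
    max_seen = float("-inf")
    dropped = 0
    for ts, key in events:
        watermark = max_seen - delay_s
        if ts < watermark:
            dropped += 1          # too late: its window is considered closed
            continue
        max_seen = max(max_seen, ts)
        window_start = ts - ts % window_s
        counts[(window_start, key)] += 1
    return dict(counts), dropped
```

Consider events at t = 0, 5, 12, 30, and then 8 (arriving last), with 10-second windows and a 10-second delay. The event at t = 8 is dropped, because the watermark has already advanced to 20. The trade-off is explicit: a longer delay accepts more late data but holds state and delays final results longer.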

Business Intelligence Enablement

We transform Databricks into a self-service analytics platform that empowers all users, from data scientists to business analysts. This includes dashboard development, automated ML pipelines, and collaboration tools.

- Self-service analytics dashboards

- Automated reporting and alerting

- ML model deployment and monitoring

- Cross-team collaboration frameworks

Result: Democratized data access with governance and security controls maintained.

Optimization & Knowledge Transfer

We don’t just launch and leave. Our team:

- Continuously fine-tunes performance and spend

- Monitors adoption to ensure ROI is realized

- Trains your teams so they own the platform’s evolution

Long-term payoff: A self-sufficient organization with a high-performance Databricks environment that scales with your ambitions.

Proven Databricks Implementation Examples

At Addepto, we design and deliver enterprise-scale Databricks solutions that process millions of data points daily and generate measurable ROI within the first quarter.

Our experience spans data integration, advanced ML pipelines, and cloud-native architecture, helping organizations reduce infrastructure costs, accelerate innovation, and unlock tangible business outcomes.

Databricks Case Studies

1. Optimizing Aircraft Turnaround Through Practical AI

We built a scalable platform on Databricks that processes streaming data from airport systems. It powers real-time predictions of aircraft stand availability, enabling smarter resource assignment through an intuitive dashboard. The solution emphasizes simplicity and domain expertise, improving turnaround accuracy and operational efficiency.

2. Real-Time Fraud Detection Platform for Renewable Energy Certificates

Addepto modernized the client's architecture using cloud-native tools and Databricks to power scalable, rule-based, and explainable fraud detection. The system processes transactions rapidly, enforces strict sequencing, maintains full auditability, and ensures long-term data retention.

3. Implementing an MLOps Platform for Seamless Transition from Concept to Deployment

We delivered a standardized, self-service MLOps platform that reduces friction between development and production. Although the project was not exclusively about Databricks, the platform uses Databricks infrastructure for its deployment pipelines, making it directly relevant to Databricks-powered workflows.
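
The fraud detection case study does not publish the client's actual rules. The plain-Python sketch below roughly illustrates what rule-based, explainable checks with strict per-certificate sequencing and an audit trail can look like. The `Transaction` fields, the three rules, and the `FraudChecker` class are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Transaction:
    certificate_id: str
    seq: int          # hypothetical per-certificate sequence number, starting at 1
    owner_from: str
    owner_to: str


@dataclass
class FraudChecker:
    last_seq: dict = field(default_factory=dict)   # certificate_id -> last accepted seq
    owner: dict = field(default_factory=dict)      # certificate_id -> current owner
    audit: list = field(default_factory=list)      # every decision, accepted or not

    def check(self, tx: Transaction):
        """Apply each rule, collect human-readable reasons, and log the decision."""
        reasons = []
        expected = self.last_seq.get(tx.certificate_id, 0) + 1
        if tx.seq != expected:
            reasons.append(f"out-of-sequence: expected {expected}, got {tx.seq}")
        current = self.owner.get(tx.certificate_id)
        if current is not None and current != tx.owner_from:
            reasons.append(f"seller {tx.owner_from} does not own certificate (owner: {current})")
        if tx.owner_from == tx.owner_to:
            reasons.append("self-transfer")
        accepted = not reasons
        if accepted:
            # Only accepted transactions advance the certificate's state.
            self.last_seq[tx.certificate_id] = tx.seq
            self.owner[tx.certificate_id] = tx.owner_to
        self.audit.append({"tx": tx, "accepted": accepted, "reasons": reasons})
        return accepted, reasons
```

Each rejection carries its reasons, which is what makes a rule-based approach explainable to auditors. Keeping every decision, including accepted ones, in the audit list mirrors the full-auditability requirement.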

Why work with us

We are part of a group of over 200 digital experts

Databricks powers intelligence that keeps aviation moving forward

Aviation leaders choose Databricks to process millions of flight events daily, unifying aircraft telemetry, passenger data, weather systems, and operational metrics into a single lakehouse architecture for comprehensive air transport optimization.

- Predictive maintenance: Databricks processes real-time sensor data from engines, hydraulics, and avionics systems using machine learning models that analyze 500+ parameters per flight, enabling airlines to predict component failures and reduce maintenance costs.

- Smart passenger flow: Databricks integrates booking systems, security checkpoints, and gate data to forecast passenger volume patterns, helping airports reduce average wait times and optimize staff allocation across terminals.

- Dynamic operations management: Databricks real-time streaming processes weather, air traffic control, and aircraft positioning data to enable dynamic rescheduling algorithms that minimize delay cascades and reduce passenger disruption.

- Intelligent customer service: Databricks-powered generative AI chatbots analyze passenger history, preferences, and real-time flight status to deliver personalized support.

- Resilience analytics: Databricks analyzes historical cancellation patterns, weather correlations, and operational bottlenecks to identify root causes and improve scheduling resilience, reducing weather-related delays.
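
The page does not specify which models airlines use for predictive maintenance. As a deliberately simple stand-in, the sketch below applies a trailing-window z-score test to one sensor parameter and flags readings that deviate sharply from recent behaviour. Production systems typically combine many parameters and learned models. The function name, window size, and threshold are hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


def flag_anomalies(readings, window: int = 20, threshold: float = 3.0) -> list[int]:
    """Return indices whose value lies more than `threshold` standard deviations
    from the mean of the preceding `window` readings (simple z-score test)."""
    history = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            # Skip flat signals (sigma == 0) to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                flagged.append(i)
        history.append(value)
    return flagged
```

Suppose a temperature trace oscillates between 100 and 101 and then spikes to 130. Only the spike is flagged. Once the spike enters the trailing window, the wider spread keeps the following normal reading from being flagged, which is a known limitation of this simple approach.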

Databricks drives connected vehicle intelligence & manufacturing excellence

Leading automotive manufacturers and fleet operators rely on Databricks to process terabytes of vehicle sensor data, manufacturing telemetry, and supply chain information daily, enabling everything from autonomous driving development to predictive quality management.

- Fleet intelligence: Databricks analyzes real-time telematics from millions of connected vehicles, processing GPS, engine diagnostics, and driver behavior data to predict maintenance needs, reducing fleet downtime and extending vehicle lifecycles.

- Connected experiences: Databricks creates unified customer profiles by combining in-vehicle behavior, service history, and preference data to deliver personalized infotainment, route optimization, and predictive services that increase customer satisfaction scores.

- Smart warranty management: Databricks correlates manufacturing quality data with field performance across vehicle populations, identifying failure patterns early to reduce warranty claims and guide product improvements.

- Autonomous systems: Databricks manages petabytes of simulation data, camera feeds, LiDAR scans, and sensor telemetry to train machine learning models for autonomous driving, supporting continuous model validation and safety testing across diverse scenarios.

- Agile supply chains: Databricks integrates supplier performance, inventory levels, and demand forecasting to optimize just-in-time manufacturing, reducing inventory carrying costs while maintaining production line uptime.

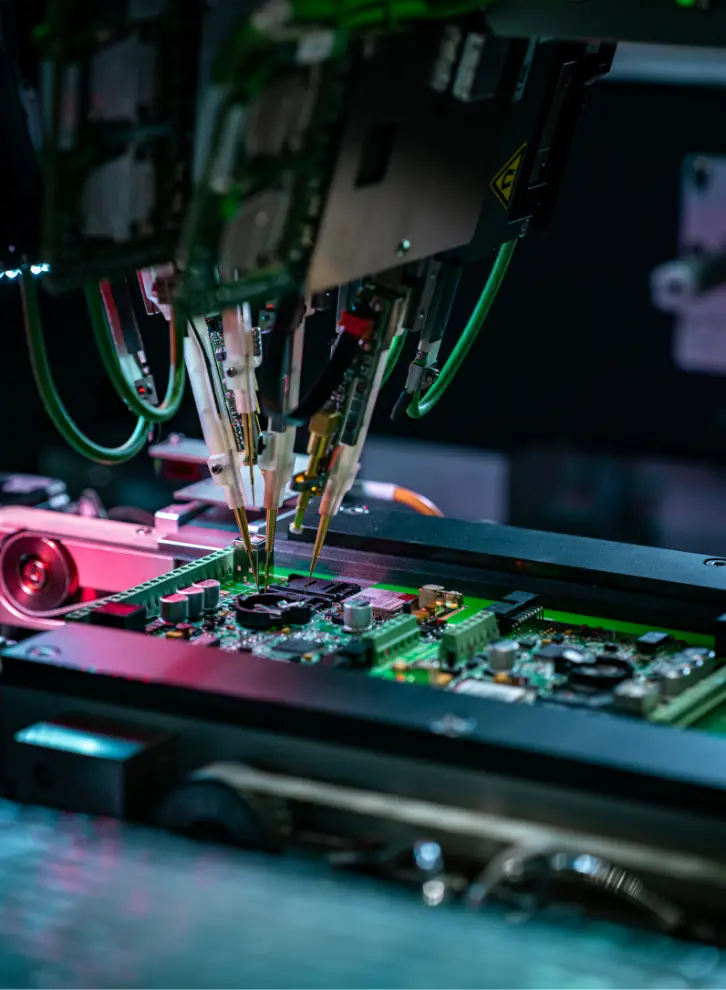

Databricks powers smart factory operations

Manufacturers trust Databricks to unify data from thousands of sensors, production systems, quality control equipment, and supply chain partners, creating intelligent factories that self-optimize and predict issues before they impact production.

- Intelligent Quality Control: Databricks processes real-time data from vision systems, spectrometers, and measurement devices to detect quality anomalies within milliseconds, reducing defect rates and preventing costly product recalls through early intervention.

- Predictive Equipment Care: Databricks analyzes vibration, temperature, pressure, and acoustic data from 10,000+ industrial sensors to predict equipment failures, reducing unplanned downtime and extending asset lifecycles.

- Dynamic Inventory Optimization: Databricks combines demand forecasting models with real-time supplier data and production schedules to maintain optimal inventory levels, reducing carrying costs while sustaining target order fulfillment rates.

- Automated Process Intelligence: Databricks connects MES, ERP, and IoT systems to create closed-loop optimization that automatically adjusts production parameters, improving overall equipment effectiveness (OEE).

- Production Optimization: Databricks identifies bottlenecks by analyzing production flow data, worker productivity metrics, and material movement patterns, increasing throughput while reducing energy consumption per unit.
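
Overall equipment effectiveness (OEE), mentioned above, is conventionally the product of three ratios: Availability × Performance × Quality. The short sketch below computes it for one production period using the standard definitions. The function name and the shift figures are hypothetical.

```python
def oee(planned_min: float, downtime_min: float, ideal_cycle_s: float,
        total_count: int, good_count: int) -> dict:
    """Compute OEE = Availability x Performance x Quality for one production period."""
    run_min = planned_min - downtime_min
    availability = run_min / planned_min                           # share of planned time actually running
    performance = (ideal_cycle_s * total_count) / (run_min * 60)   # actual output vs. ideal-speed output
    quality = good_count / total_count                             # share of units that pass inspection
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }
```

Consider a hypothetical 480-minute shift with 48 minutes of downtime, a 1.2-second ideal cycle, and 19,440 units produced, of which 18,468 are good. That gives 90% availability, 90% performance, 95% quality, and an OEE of about 77%. A closed-loop optimization can target whichever of the three factors is weakest.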

Databricks accelerates engineering innovation

Engineering teams choose Databricks to centralize and analyze massive datasets from CAD systems, finite element analysis, wind tunnels, and field testing, accelerating product development cycles from months to weeks through AI-powered insights.

- Unified simulation analytics: Databricks integrates results from ANSYS, MATLAB, SolidWorks, and other engineering tools into a single data lakehouse, enabling cross-simulation correlation analysis that reduces design iterations and accelerates time-to-market.

- AI-accelerated design: Databricks machine learning models analyze thousands of design parameters and simulation outcomes to suggest optimal configurations, reducing prototype testing while improving performance metrics.

- Digital twin development: Databricks processes real-time sensor data alongside historical performance metrics to create precise virtual models that predict asset behavior with high accuracy, enabling predictive maintenance and performance optimization.

- Seamless team collaboration: Databricks provides secure, governed access to engineering data across global teams, enabling simultaneous design work that reduces development cycles by 35% while maintaining strict IP protection and compliance standards.

Databricks in Business

Unified lakehouse

Break down data silos with a single, scalable platform that combines the reliability of data warehouses with the flexibility of data lakes. This unified architecture provides consistent, high-quality data for analytics, BI, and AI, which speeds decision-making and business insights.

Scalable efficiency

Leverage a cloud-native, auto-scaling platform that optimizes resources and controls costs. Databricks scales seamlessly to accommodate growing data volumes and workloads, helping businesses maintain performance without overspending or operational complexity.

AI Integration

Accelerate innovation with built-in machine learning and AI capabilities. Databricks streamlines model development, deployment, and management, empowering organizations to quickly transform data into actionable intelligence and competitive advantage.

FAQ - Databricks Implementation

How long does it typically take to see our first meaningful results?

Most organizations achieve initial wins within 30-60 days. Quick wins include consolidating reporting dashboards, accelerating existing analytics queries, and enabling self-service data access. Complex AI initiatives typically show results in 90-120 days.

What’s required from our team during implementation?

Success requires dedicated resources: 1-2 data engineers for technical setup, a project sponsor for stakeholder alignment, and subject matter experts to define use cases. We provide implementation frameworks and best practices to minimize disruption to daily operations.

Can we start with a pilot project to prove value before full commitment?

Yes. Most enterprises begin with a focused pilot targeting specific business pain points. This approach allows you to demonstrate ROI, build internal expertise, and create a blueprint for broader rollout while minimizing risk and investment.

What happens during peak usage periods – will performance degrade?

Databricks scales compute resources automatically based on demand. During peak periods, autoscaling adds worker nodes to clusters (and SQL warehouses can add clusters) within minutes to maintain performance. You pay only for the resources you use, avoiding over-provisioning costs.
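
Databricks manages autoscaling internally, and its exact algorithm is not described here. To illustrate the general trade-off, the sketch below uses a hypothetical policy: scale up immediately to cover queued work, capped at a maximum, and scale down only after sustained low utilization. The function, parameters, and thresholds are all assumptions.

```python
import math


def target_workers(pending_tasks: int, tasks_per_worker: int, current: int,
                   min_workers: int, max_workers: int,
                   idle_minutes: float = 0, scale_down_after: float = 10) -> int:
    """Hypothetical autoscaling rule (not Databricks' actual algorithm).

    Scale up immediately to cover queued work, capped at max_workers;
    scale down only after the cluster has been underused for scale_down_after minutes.
    """
    needed = max(min_workers, math.ceil(pending_tasks / tasks_per_worker))
    if needed > current:
        return min(needed, max_workers)
    if needed < current and idle_minutes >= scale_down_after:
        return needed
    return current
```

The asymmetry is intentional. Scaling up quickly protects performance during peaks, while a scale-down delay avoids repeatedly releasing and re-acquiring nodes when load fluctuates. The `max_workers` cap is what bounds cost.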

How does Databricks support advanced AI capabilities like generative AI and large language models?

Databricks provides optimized infrastructure for training and serving large models, including GPU clusters, distributed training, and model serving endpoints. Built-in vector search enables retrieval-augmented generation (RAG) for enterprise AI applications.
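
At its core, retrieval-augmented generation finds the stored passages whose embeddings are closest to the question's embedding and places them in the prompt. The brute-force sketch below shows that retrieval step with cosine similarity in plain Python. The toy three-dimensional "embeddings", document ids, and prompt template are hypothetical; real systems use model-generated embeddings and an approximate-nearest-neighbour index such as a managed vector search service.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query_vec: list[float], index: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k documents most similar to query_vec (brute-force search)."""
    scored = sorted(index.items(), key=lambda kv: cosine(query_vec, kv[1]), reverse=True)
    return [doc_id for doc_id, _ in scored[:k]]


def build_prompt(question: str, doc_ids: list[str], docs: dict[str, str]) -> str:
    """Assemble a grounded prompt from the retrieved passages (hypothetical template)."""
    context = "\n".join(f"- {docs[d]}" for d in doc_ids)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

Brute-force scoring is fine for a handful of documents. A vector search index exists precisely to make this nearest-neighbour lookup fast over millions of embeddings.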