July 21, 2023

Not only GPT. What LLMs can you choose from?

Author:

CSO & Co-Founder

Reading time:

7 minutes

ChatGPT – which sparked the frenzy around Large Language Models – only launched in November 2022, but the stats prove it is just the beginning. There are solid reasons for us to believe that harnessing Generative AI will go from general-purpose use cases to more specific-oriented ones: already nearly one in ten (8.3%) of machine learning teams have already deployed an LLM application into production (state for April 2023).

However, before we go deeper and present what, how, and why Large Language Models, we must list some stats showing the obstacles to using Generative AI in improving internal processes.

According to the Arize AI survey, team leaders see three main barriers that stop companies from implementing Gen AI tools on a large scale:

- Data privacy

The protection of proprietary data is the primary obstacle to the widespread deployment of Large Language Models (LLMs). Many teams face challenges in this area, emphasizing the importance of addressing data privacy concerns.

Read more: Privacy Concerns in AI-Driven Document Analysis: How to manage the confidentiality?

- Reliability

The second significant barrier is the accuracy of LLM responses and the occurrence of “hallucinations” (generating incorrect or nonsensical information). This highlights the need for improved LLM observability tools to fine-tune models and troubleshoot issues.

- Costs

Cost is another concern ML teams mention regarding LLM adoption. Implementing and utilizing large language models can be expensive, making it a significant consideration for organizations.

Unlock unlimited LLM’s possibilities with Generative AI development company. Reach out to us and transform your business with cutting-edge technology.

Open AI leads the pack, but the competition doesn’t sleep

Artificial intelligence (AI) is evolving at a breakneck pace, with Large Language Models (LLMs) making significant strides. Even though OpenAI currently dominates the field of LLMs, with 83.0% of ML teams considering or already working with their GPT models, other significant players such as Google or Microsoft are trying to make the mark, not to mention open-source solutions that are ready to grab some bite.

Source: Arize AI Survey

Large Language Models Explanation

Large Language Model (LLM) refers to an advanced AI model that utilizes deep learning techniques to understand the nuances of natural language. These models are trained on massive amounts of data, enabling them to learn natural language’s complex patterns, relationships, and nuances. Thanks to it, they can understand and generate contextually relevant text.

Understanding and generating natural language makes them capable of handling various tasks, including natural language processing, natural language generation, text completion, translation, summarization, question-answering, and more. They have potential applications in chatbots, virtual assistants, content creation, sentiment analysis, recommendation systems, and many other areas that involve processing and generating textual data.

How to choose the right LLM?

Commercial models made by OpenAI are highly popular due to their convenience and availability. OpenAI’s API Key is paid for the tokens’ usage.

What’s a token? You can think of tokens as pieces of words used for natural language processing. For English, 1 token is approximately 4 characters or 0.75 words. As a point of reference, the collected works of Shakespeare are about 900,000 words or 1.2M tokens.

OpenAI API and other commercial models typically offer advanced, market-proven capacities, yet for those who are seeking more control and flexibility, there is now a new alternative which is open-source Large Language Models.

Open-source Large Language Models provide – on the one hand – significantly higher control over the data used in training the language model (there is a possibility of training the model on internal data) and – on the other – the possibility to change the model architecture.

These qualities make open-source LLMs much more flexible than paid API and flexible enough to address more specific use cases, especially since none of the data is shared with a third party. Open-source LLMs provide better security measures as a result.

Cutting to the chase, which option will be better? The answer is – as always – “it depends,” and the whole discussion is like neverending tech beef “buy vs. build.”

Paid API LLM model is definitely a go-to direction for those who want to instantly implement a general-purpose LLM into their workflows, avoiding complex engineering. The time-to-value – in that case – is cut to a minimum, and even when the initial results are far from the expected, they can be slowly optimized by polishing initial prompts. The drawback lies deeper: the architecture of the paid API is set in stone as it is when we talk about any other SaaS solutions.

If you like to know what is going on under the hood, an open-source solution may be a better option. With the right expertise, you could train an open-source model for very niche use cases while maintaining full control over the training data.

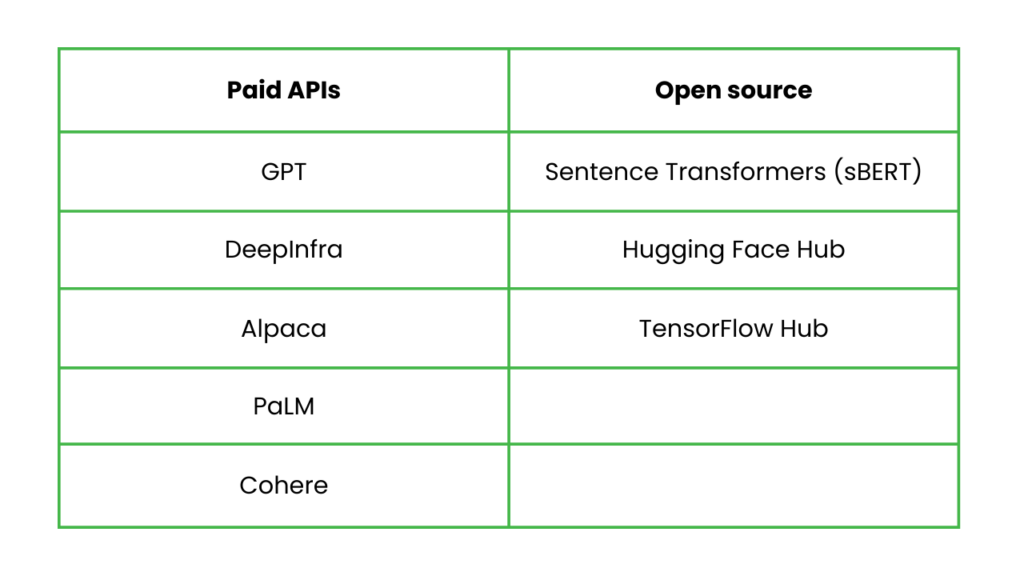

The most popular Large Language Models (Paid API LLM and Open-Source)

There are a plethora of open-source and commercial language models that are deployable on-premise or in a private cloud, which translates to fast business adoption and robust cybersecurity.

GPT-based models (OpenAI)

GPT-4 is considered another milestone in Natural Language Processing that came out this March. It is an improvement on the GPT-3.5 Turbo that powers ChatGPT. However, these two models are not the only ones that were developed and used by OpenAI. How does GPT-4 – which is the jewel in the OpenAI crown – differ from other iterations of GPT models?

GPT-3

GPT-3, released in 2019, was based on – of course – GPT-2. Like its predecessor, it can generate strings of complex language predicting the next most likely word in the sequence. GPT-3 was trained on 175 billion parameters, mainly scraped from Common Crawl, a dataset that, at the time of GPT -3’s release, encompassed 2.6 billion stored web pages. GPT-3’s capabilities made it the most advanced LLM outperforming Google’s BERT. BERT could understand and analyze text, but GPT-3 can also generate it from scratch.

After release, multiple versions of GPT-3 arise, but GPT-3 Davinci is the most stable and popular.

GPT-3.5 Turbo

GPT-3.5 Turbo was released on March 1st, 2023, based on an improved combination of GPT 3.5 and GPT-3 Davinci.

The main differentiator of GPT-3.5 Turbo was the Reinforcement Learning from Human Feedback (RLHF) that OpenAI used while developing this model. The method involves human feedback ‘rating’ the model’s performance.

GPT-4

GPT-4, although its use cases are very similar to its procedures, is proved to be much more accurate than GPT-3 and GPT -3.5 Turbo (it was even able to pass the Bar Exam, while ChatGPT achieved slightly better than a 50%, and GPT-3 Davinci has failed)

This model has ‘multimodality’ built-in, which means it can utilize multiple forms of sensory perception. GPT-4 can accept a prompt of text, which—parallel to the text-only setting—lets the user specify any vision or language task. Specifically, it generates text outputs (natural language, code, etc.) given inputs consisting of interspersed text and images.

Source: Chicago-Kent College of Law

LaMDA (Google)

LaMDA, like other recent language models, such as BERT and GPT-3, LaMDA is based on the Transformer architecture. This architecture allows the model to comprehend a large number of words, such as a sentence or paragraph, by understanding their relationships and predicting the next words in the sequence. Google states that the main difference between LaMDA and other language models is its training on dialogue data. Through this training process, LaMDA was supposed to understand the subtle nuances that distinguish open-ended conversations from other types of language usage.

BLOOM (Hugging Face)

BLOOM is a Large Language Model (LLM) able to output coherent human-like text in 46 languages and 13 programming languages.

The architecture of BLOOM is essentially similar to GPT3 (auto-regressive model for next token prediction).

LLaMA & LLaMA 2

Similar to other extensive language models, LLaMA operates by receiving a sequence of words as input and then predicting the next word, allowing it to generate text recursively. For the model training, the providers selected text from the 20 languages spoken by the highest number of people, with particular emphasis on languages utilizing Latin and Cyrillic alphabets.

Llama 2 is the second version of the open source language model from Meta. It is based on a transformer architecture, was trained on 2 trillion tokens, and has double the context length than Llama 1.

Category: