Data Engineering Services

We provide end-to-end data engineering services that take our clients from raw data to actionable information using Data Lakes, Delta Tables, streaming applications, Data Warehouses, and DataOps.

How Do We Support Clients in Data Engineering?

Our Data Engineering practice specializes in designing and constructing robust systems for data ingestion, collection, storage, and analysis. This discipline is crucial across virtually all industries, underpinning many facets of data science.

Our Data Engineers make data easy to access and analyze raw data in order to design advanced Data Pipelines and Data Platforms. Without solid data engineering, making sense of the vast quantities of data available to organizations would be exceedingly difficult. By applying current technologies and methodologies, we ensure that data is not only accessible but also actionable, empowering companies to make informed decisions and maintain a competitive edge in their respective markets.

Who are our Data Engineering consultants?

Data engineers possess expertise in a variety of programming languages essential to data platform development. They are responsible for constructing data pipelines that facilitate the seamless transfer of data between different systems. Additionally, they handle the transformation of data from one format to another, enabling data scientists to access and analyze data from diverse sources efficiently.

By building and maintaining these pipelines, data engineers ensure that data is:

- consistently available,

- properly formatted,

- and ready for complex analytical processes.

Their work is crucial for integrating disparate data systems, thereby supporting comprehensive data analysis and fostering data-driven decision-making across organizations.

What are Data Engineering Services?

Addepto’s Data Engineering services are designed to elevate your business to the next level in data usage, management, and automation. With our advanced automated data pipelines, you can focus on extracting valuable insights without the hassle of manual data handling.

- Our expert team supports global enterprises, including Porsche, RR, and SITA, in developing sophisticated data processing pipelines.

- We collaborate with our clients to extract critical business information, manage data efficiently, and ensure the highest standards of data quality and availability.

Our strategic project approach and comprehensive data engineering services empower companies to make informed decisions.

Explore our suite of data-related services (case studies) and discover how Addepto solutions can transform your business.

Audit and future-proof, scalable dataflows

Our Data Engineers work closely with Data Scientists as part of integrated teams. Because of this hands-on collaboration, we understand both the technical demands of building data systems and the real-world needs of analysis and modeling. This helps us avoid disconnects between data engineering and data science, leading to smoother, faster project delivery.

We design and build the data infrastructure your business depends on—whether you’re a startup or a large company. This includes setting up data lakes, optimizing data warehouses, and managing complex data pipelines across industries.

We choose the right tools for each job, working with technologies like AWS (S3, Glue, Redshift, EMR), Apache Airflow, Snowflake, Databricks, Apache Spark, and Kafka. Our work is shaped by your goals, timelines, and budget—not by one-size-fits-all solutions. We focus on doing what works best for your specific case, backed by experience from many successful, real-world projects.

Our Data Engineering development process

Understanding business needs and technical requirements

Addepto is an experienced Data Engineering company. We help companies all over the world make the most of the data they process every day.

First, our data engineering team runs workshops and discovery calls with prospective end users. Then we gather all the necessary information from the technical departments.

Let’s discuss a data engineering solution for your business!

Analysis of existing and future data sources

At this stage, it is essential to go through current data sources to maximize the value of data. You should identify multiple data sources from which structured and unstructured data may be collected.

During this step, our experts will prioritize and assess them.

Building and implementing a Data Lake

Data Lakes are among the most cost-effective options for storing data. A data lake is a data repository that stores raw and processed data files, both structured and unstructured. Such a system can hold flat, source, transformed, or raw files.

Data Lakes can be built on, or accessed through, technologies such as Hadoop, S3, GCS, or Azure Data Lake, either on-premises or in the cloud.
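As a rough illustration of the raw and processed files described above, the following Python sketch lays out a tiny data lake on the local filesystem. The zone names (`raw`, `processed`), the `ingest_date=` partition folder, the file names, and the `orders` dataset are assumptions made for this example only. In practice the same layout would usually live in object storage such as S3, GCS, or Azure Data Lake.

```python
import csv
import json
import tempfile
from pathlib import Path


def write_raw(lake_root: Path, dataset: str, ingest_date: str, records: list[dict]) -> Path:
    """Store source records unchanged in the raw zone as JSON lines."""
    target_dir = lake_root / "raw" / dataset / f"ingest_date={ingest_date}"
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / "part-0000.jsonl"
    with target.open("w", encoding="utf-8") as fh:
        for record in records:
            fh.write(json.dumps(record) + "\n")
    return target


def build_processed(lake_root: Path, dataset: str, ingest_date: str) -> Path:
    """Read the raw file and write a cleaned CSV into the processed zone."""
    raw_file = lake_root / "raw" / dataset / f"ingest_date={ingest_date}" / "part-0000.jsonl"
    target_dir = lake_root / "processed" / dataset / f"ingest_date={ingest_date}"
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / "part-0000.csv"
    with raw_file.open(encoding="utf-8") as src, \
            target.open("w", newline="", encoding="utf-8") as dst:
        writer = csv.DictWriter(dst, fieldnames=["order_id", "amount"])
        writer.writeheader()
        for line in src:
            record = json.loads(line)
            # Keep only rows that carry an amount and normalise it to a float.
            if record.get("amount") is not None:
                writer.writerow({"order_id": record["order_id"],
                                 "amount": float(record["amount"])})
    return target


# Hypothetical sample data: one valid order and one with a missing amount.
lake = Path(tempfile.mkdtemp())
raw_file = write_raw(lake, "orders", "2024-01-01", [
    {"order_id": 1, "amount": "19.90"},
    {"order_id": 2, "amount": None},
])
processed_file = build_processed(lake, "orders", "2024-01-01")
with processed_file.open(newline="", encoding="utf-8") as fh:
    processed_rows = list(csv.DictReader(fh))
```

Keeping the raw file untouched means the processed zone can be rebuilt at any time if the cleaning rules change.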

Designing and implementing Data Pipelines

After selecting data sources and storage, it is time to begin developing data processing jobs.

These are the most critical activities in the data pipeline because they turn data into relevant information and generate unified data models.
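To make the idea of a unified data model more concrete, here is a minimal sketch of a processing job that maps two differently shaped sources onto one schema. The source names (`crm_rows`, `shop_rows`), their fields, and the `Customer` model are hypothetical and chosen only for illustration; a production job would typically run on an engine such as Apache Spark rather than plain Python.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    """Unified model that downstream reports can rely on."""
    customer_id: str
    email: str
    country: str


def from_crm(row: dict) -> Customer:
    # The hypothetical CRM export uses upper-case keys and padded values.
    return Customer(
        customer_id=f"crm-{row['ID']}",
        email=row["EMAIL"].strip().lower(),
        country=row["COUNTRY"].strip().upper(),
    )


def from_shop(row: dict) -> Customer:
    # The hypothetical web shop nests the address and uses different names.
    return Customer(
        customer_id=f"shop-{row['user_id']}",
        email=row["mail"].strip().lower(),
        country=row["address"]["country_code"].upper(),
    )


def unify(crm_rows: list[dict], shop_rows: list[dict]) -> list[Customer]:
    """Map both sources to the unified model, keeping the first record per email."""
    seen = set()
    unified = []
    for customer in [*map(from_crm, crm_rows), *map(from_shop, shop_rows)]:
        if customer.email not in seen:
            seen.add(customer.email)
            unified.append(customer)
    return unified


customers = unify(
    crm_rows=[{"ID": 7, "EMAIL": " Ann@Example.com ", "COUNTRY": "pl"}],
    shop_rows=[
        {"user_id": 42, "mail": "ann@example.com", "address": {"country_code": "pl"}},
        {"user_id": 43, "mail": "bob@example.com", "address": {"country_code": "de"}},
    ],
)
```

In this sketch the CRM record wins when the same email appears in both sources; which source takes precedence is a business decision, not a technical one.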

Automation and deployment

The next step, DevOps, is one of the most important parts of data development consulting. Our team develops the right DevOps strategy to deploy and automate the data pipeline.

This strategy matters because it saves considerable time and takes care of managing and deploying the pipeline.
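One core piece of pipeline automation is running tasks in dependency order. The sketch below shows that idea using only Python's standard-library `graphlib` module. The task names and the `run_pipeline` helper are hypothetical; a real deployment would normally rely on an orchestrator such as Apache Airflow, which adds scheduling, retries, and monitoring on top of this basic ordering.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it starts.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "quality_checks": {"transform"},
    "publish": {"quality_checks"},
}


def run_pipeline(tasks, dependencies):
    """Run every task once, after all of its upstream tasks have run."""
    executed = []
    for name in TopologicalSorter(dependencies).static_order():
        tasks[name]()  # a real task would extract, transform, or load data
        executed.append(name)
    return executed


# Placeholder callables stand in for real pipeline steps.
tasks = {name: (lambda: None) for name in dependencies}
execution_order = run_pipeline(tasks, dependencies)
```

The two extract tasks have no dependency on each other, so their relative order is not fixed; everything downstream always runs after them.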

Testing

Testing, measuring, and learning are important in the last stage of the Data Engineering consulting process.

DevOps automation is vital at this point.
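As an illustration of what automated testing of a pipeline's output can look like, the following sketch runs three simple data quality checks over a handful of rows. The check functions, column names, and sample rows are assumptions made for this example; dedicated tools such as Great Expectations or Deequ provide far richer versions of the same idea.

```python
def check_not_null(rows, column):
    """Every row must carry a value in the given column."""
    failed = [i for i, row in enumerate(rows) if row.get(column) is None]
    return {"check": f"{column} is not null", "passed": not failed, "failed_rows": failed}


def check_unique(rows, column):
    """No value in the given column may appear more than once."""
    seen, failed = set(), []
    for i, row in enumerate(rows):
        value = row.get(column)
        if value in seen:
            failed.append(i)
        seen.add(value)
    return {"check": f"{column} is unique", "passed": not failed, "failed_rows": failed}


def check_between(rows, column, low, high):
    """Non-null values must fall inside the closed range [low, high]."""
    failed = [
        i for i, row in enumerate(rows)
        if row.get(column) is not None and not (low <= row[column] <= high)
    ]
    return {"check": f"{column} in [{low}, {high}]", "passed": not failed, "failed_rows": failed}


# Hypothetical pipeline output with three deliberate problems.
rows = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": 2, "amount": None},   # missing amount
    {"order_id": 2, "amount": -5.0},   # duplicate id and negative amount
]

results = [
    check_not_null(rows, "amount"),
    check_unique(rows, "order_id"),
    check_between(rows, "amount", 0, 10_000),
]
failed_checks = [result["check"] for result in results if not result["passed"]]
```

Run as part of an automated deployment, a non-empty `failed_checks` list could be used to stop the pipeline before bad data reaches reports.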

Why work with us

AI Experts on board

Finished projects

We are part of a group of over 200 digital experts

Different industries we work with

Recognitions & awards

Our Data Engineering Tools and Technologies

The Addepto team leverages the most advanced tools and technologies available in the market. To deliver stable and high-quality software, we collaborate with leading cloud solution providers, including AWS, Azure, and GCP.

Our data engineering team utilizes a comprehensive suite of tools to ensure robust data management:

- Data Platforms: We work with various data platforms such as Databricks, Cloudera, and Snowflake to support large-scale data processing and storage solutions.

- Data Observability: Tools like Datadog, Grafana, and Prometheus help us monitor and gain insights into data pipelines, ensuring they run smoothly and efficiently.

- DataOps: We employ DataOps tools like Apache NiFi, Airflow, and dbt (data build tool) to automate, orchestrate, and manage data workflows and pipelines.

- Data Quality Testing: To maintain high data quality, we use testing tools such as Great Expectations, Deequ, and Talend, which allow us to detect and address data issues proactively.

- Data Transformation: We use tools like Apache Beam, Talend, and Informatica for data transformation, ensuring that data is properly formatted and ready for analysis.

Additionally, we are deeply committed to the open-source community and technologies, ensuring our clients benefit from some of the most popular and effective data engineering software without incurring additional costs. This approach allows us to provide robust, cost-efficient solutions that meet the diverse needs of our clients.

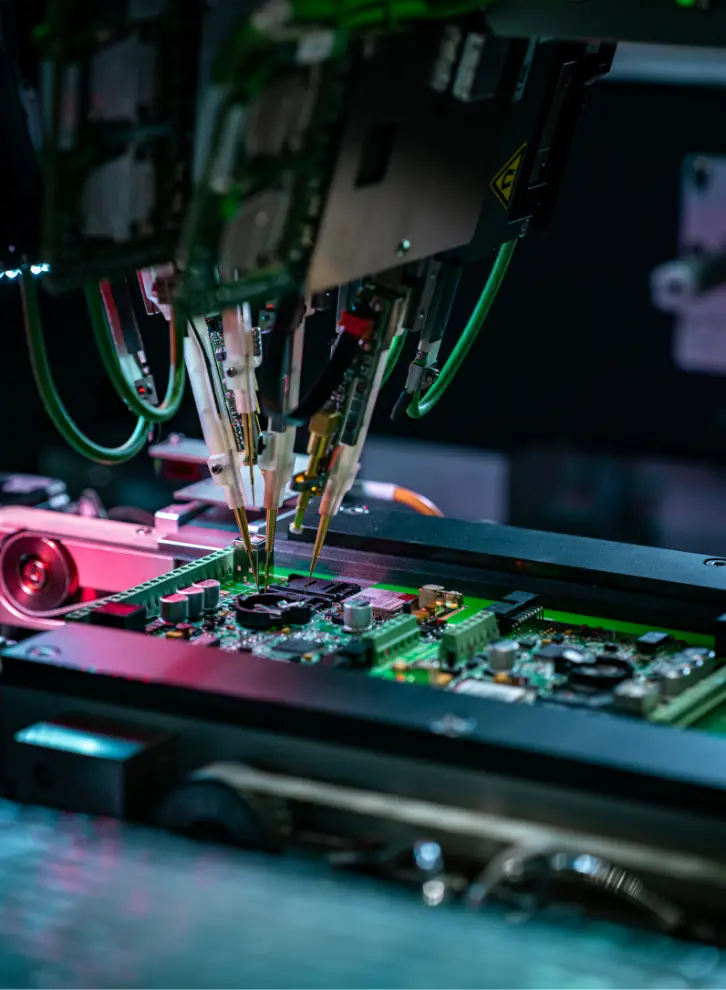

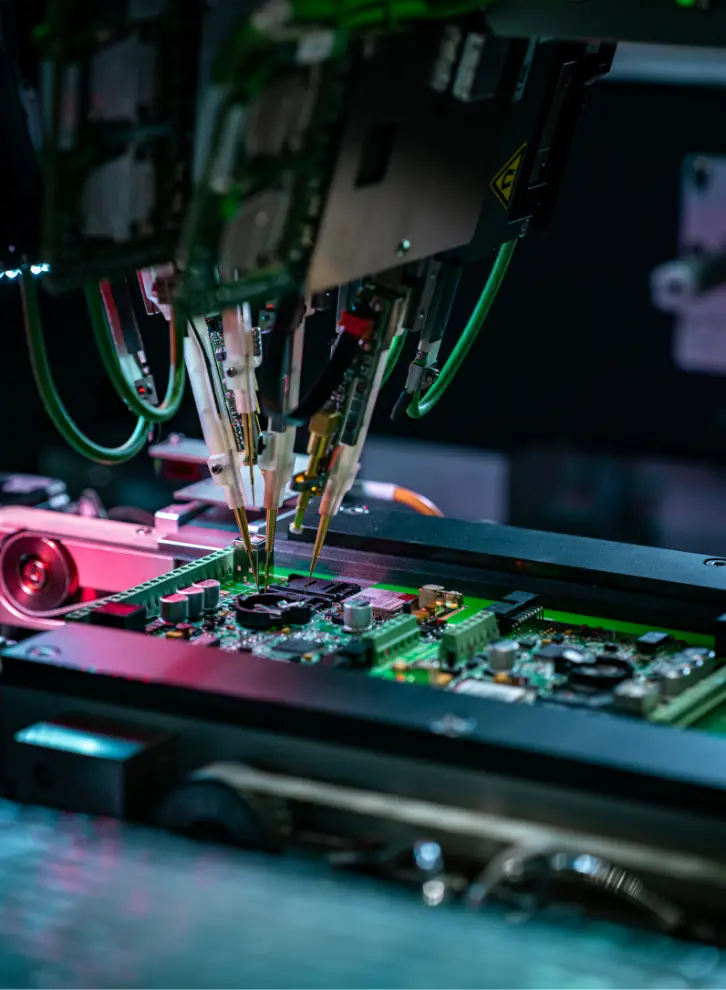

Product Traceability System for a big manufacturing company

Manufacturers face complex operations, expensive downtime, and strict quality standards. We help by building tailored data systems that support smooth production and smarter decision-making.

- Predictive Maintenance – Using sensor data and maintenance history, we help identify wear and failure patterns early—keeping machines running and maintenance costs down.

- Supply Chain Visibility – We link data across procurement, inventory, and production to give planners a clear view of the entire supply chain and improve coordination.

- Quality Control – Real-time monitoring of production data allows for early detection of anomalies, helping reduce defects and ensure product consistency.

- Production Optimization – By centralizing data from every stage of manufacturing, we help teams identify inefficiencies, eliminate bottlenecks, and increase throughput.

Customer Data Platform implementation

In retail, staying competitive means reacting quickly to shifting demand, customer expectations, and supply challenges. Our systems help retailers make smart, timely decisions.

- Customer Profiles and Personalization – We create unified customer views by combining data from online and in-store touchpoints, enabling better targeting and tailored experiences.

- Inventory and Forecasting – Sales trends, external signals, and historical data feed into forecasting models that help keep the right products on the shelves—no more guesswork.

- Dynamic Pricing – We build flexible pricing systems that adjust based on real-time demand, competitor moves, and buyer behavior – maximizing margin while staying competitive.

- Supply Chain Monitoring – Our tools provide continuous tracking of inventory and shipments, helping avoid stockouts, delays, and over-ordering.

Data Engineering in Aviation Industry

Airlines operate under tight safety, scheduling, and cost constraints. We build aviation-grade data platforms to support safer flights and more efficient operations.

- Predictive Aircraft Maintenance – We use sensor streams and maintenance logs to detect signs of wear or failure before they lead to grounded aircraft – improving safety and uptime.

- Fuel Efficiency – Flight data, routes, and weather models come together to pinpoint where airlines can save fuel and reduce emissions.

- Air Traffic and Scheduling – Our systems help operators better manage flight paths and runway use, minimizing delays and improving airspace utilization.

- Passenger Experience – We combine customer feedback with operational data to identify what matters most to passengers and how to improve their journey.

Investment and Financial Services

Fast, accurate data is essential in financial services. We build solid, scalable infrastructure to support trading, compliance, and risk management.

- Market Data Infrastructure – We handle ingestion and organization of high-volume financial data from varied sources, ensuring it’s always clean, accessible, and analysis-ready.

- Trading Systems – Our low-latency pipelines ensure that pricing and trade data flows quickly and reliably – essential for algorithmic trading and real-time analytics.

- Compliance Reporting – We automate data collection and reporting processes, helping firms meet regulatory demands without manual overhead.

- Risk Management – Our solutions bring together portfolio, counterparty, and market data so risk teams can act on a complete, real-time picture.

Wherever you are, we can offer a complete, end-to-end data engineering solution

Modern Data Pipelines

Designing, constructing, and implementing end-to-end automated data pipelines of production quality.

The Addepto data engineering consulting team has strong experience in the implementation of automated data pipelines, both on-premises and in the cloud.

Data Preparation and ETL/ELT

Data preparation and ETL/ELT (extract, transform, load, or extract, load, transform) cover the processing, transformation, and loading of data into the data model required for business reporting and advanced analytics. In ETL the data is transformed before it is loaded into the target system; in ELT it is loaded first and transformed inside the target system. Our Data Engineering team has developed such pipelines for many business departments such as Finance, Sales, Supply Chain, and others.
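The difference between ETL and ELT comes down to where the transformation runs. The sketch below shows both variants against an in-memory SQLite database standing in for a data warehouse. The table names, the sample rows, and the `run_etl` / `run_elt` helpers are hypothetical and exist only for this illustration.

```python
import sqlite3

# Hypothetical source records: (day, department, amount as text).
source_rows = [
    ("2024-01-01", "sales", "100.50"),
    ("2024-01-01", "sales", "49.50"),
    ("2024-01-02", "finance", "10.00"),
]


def run_etl(conn, rows):
    """ETL: aggregate in Python first, then load only the finished model."""
    totals = {}
    for day, department, amount in rows:
        key = (day, department)
        totals[key] = totals.get(key, 0.0) + float(amount)
    conn.execute("CREATE TABLE daily_totals_etl (day TEXT, department TEXT, total REAL)")
    conn.executemany(
        "INSERT INTO daily_totals_etl VALUES (?, ?, ?)",
        [(day, department, total) for (day, department), total in totals.items()],
    )


def run_elt(conn, rows):
    """ELT: load the raw rows as-is, then transform with SQL in the database."""
    conn.execute("CREATE TABLE staging_raw (day TEXT, department TEXT, amount TEXT)")
    conn.executemany("INSERT INTO staging_raw VALUES (?, ?, ?)", rows)
    conn.execute(
        """
        CREATE TABLE daily_totals_elt AS
        SELECT day, department, SUM(CAST(amount AS REAL)) AS total
        FROM staging_raw
        GROUP BY day, department
        """
    )


conn = sqlite3.connect(":memory:")
run_etl(conn, source_rows)
run_elt(conn, source_rows)

query = "SELECT day, department, total FROM {} ORDER BY day, department"
etl_result = conn.execute(query.format("daily_totals_etl")).fetchall()
elt_result = conn.execute(query.format("daily_totals_elt")).fetchall()
```

Both variants produce the same daily totals here. The ELT variant also keeps the raw rows in `staging_raw`, which makes it possible to re-run or change the transformation later without going back to the source.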

Data Lake Implementation

Data Lakes are a powerful and flexible option for cost-effective data storage and fast processing. Adopting a Data Lake can help you extend your business data architecture. Addepto has used Data Lake solutions to solve a variety of client business challenges such as Product Traceability, Customer Data Platforms, IoT data reporting, and others.

Cloud Data Architecture

Today, it is essential to design and build flexible and highly accessible business data architectures. Drawing on experience from several large enterprises, our Data Architects can help take your data analytics foundation to the next level. Try our Big Data Engineering Services!

Data Engineering FAQ

How do big tech companies use data engineering?

Many e-commerce giants use the power of data to create value for their businesses. Specific data allows you to attract potential customers and thereby significantly increase business profits.

Amazon personalizes every interaction by using a large amount of client data.

The company uses data to optimize pricing, advertising, and the supply chain, and even to reduce fraud.

Nordstrom’s data engineers have developed a system for monitoring customer habits and behavior using Wi-Fi.

The data obtained allowed the company to study the purchasing trends of its customers, which led to better personalization and improved customer service overall.

What is the difference between Data Engineering and Data Science?

Data science is an interdisciplinary field that uses methods and techniques from statistics, applied science, and computer science to analyze structured and unstructured data and derive useful insights and information.

Data engineering is responsible for building the pipelines and processes that move data from one system to another.

Do I need Data Engineering?

We are surrounded by data. This resource may be used for a variety of purposes, including customer service, market research, and, of course, sales. Developing sophisticated data systems for businesses is quickly becoming necessary.

You should hire data engineering consulting experts to organize your system and use the data to improve your business performance.

What is a Data Pipeline?

A data pipeline is a sequence of data processes that extract data from one system, process it, and load it into another.

Data pipelines are classified into two types: batch and real-time.
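The difference between the two types can be sketched in a few lines of Python. A batch job sees the whole dataset at once and produces one result, while a real-time (streaming) job handles each record as it arrives and keeps a running state. The function names and sensor readings below are made up for this illustration; production streaming would typically use a platform such as Kafka together with a stream processing engine.

```python
from typing import Iterable, Iterator


def batch_average(readings: list[float]) -> float:
    """Batch: the full dataset is available, so compute one result at the end."""
    return sum(readings) / len(readings)


def streaming_average(readings: Iterable[float]) -> Iterator[float]:
    """Real-time: update a running average as each reading arrives."""
    count = 0
    total = 0.0
    for value in readings:
        count += 1
        total += value
        yield total / count


readings = [10.0, 20.0, 30.0]

batch_result = batch_average(readings)                     # 20.0
stream_results = list(streaming_average(iter(readings)))   # [10.0, 15.0, 20.0]
```

The streaming version has an up-to-date answer after every record, at the cost of keeping state between records; the batch version is simpler but only answers once all data is in.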

What is the future of Data Engineering?

The following four areas were highlighted as technological shifts in data engineering of the future:

- Increased connectivity between data sources and the data warehouse

- Self-service analytics with intelligent tools made possible by data engineering

- Automation of Data Science functions

- Hybrid data architectures spanning on-premises and cloud environments

What does a Data Engineer do?

Data engineers are responsible for the design, development, and maintenance of the data platform, which includes the data infrastructure, data processing applications, data storage, and data pipelines.

In a large company, data engineers are usually divided into teams that focus on different parts of the data platform: Data warehouse & pipelines, Data infrastructure, Data applications.

Why is Data Engineering so important?

To establish a genuinely effective analytics program, companies must intentionally invest in developing their data engineering expertise.

This includes building a solid foundation for data management: identifying gaps and quality concerns while improving data collection.

Companies that actively invest in engineering professionals will get the most out of data in the coming years.

What kind of services do you offer in the area of Data Engineering?

We offer comprehensive data engineering services that encompass the entire data lifecycle.

Our services include:

- Data Lakes: Designing and implementing cost-effective storage solutions for raw and processed data.

- Delta Tables: Utilizing Delta Lake technology for efficient and reliable data storage.

- Streaming Applications: Developing real-time data processing applications.

- Data Warehouses: Building scalable data warehouses for structured data storage and retrieval.

- DataOps: Implementing automated processes for data integration, quality assurance, and deployment.

How do you secure data and privacy in Data Engineering?

We prioritize data security and privacy by employing industry-leading practices and technologies:

- Data Encryption: We encrypt data both in transit and at rest to protect it from unauthorized access.

- Access Controls: Implementing strict access control mechanisms to ensure that only authorized personnel can access sensitive data.

- Compliance: Adhering to relevant data protection regulations (e.g., GDPR, CCPA) to ensure compliance.

- Monitoring and Auditing: Utilizing advanced monitoring tools to track data access and modifications, and conducting regular audits to identify and address potential vulnerabilities.

Can you consult on only some parts of my Data Engineering project?

Yes, we offer flexible consulting services tailored to your specific needs. Whether you require assistance with a particular phase of your data project, such as data pipeline design, data lake implementation, or data quality testing, we can provide expert guidance and support to help you achieve your goals.